Whenever the switch on my LAN port restarts, both HA firewalls restart. Why does this happen?



Hi Dev,

You can find the HA log files in the /log directory through the advanced shell. To access log files through SSH, do as follows:

```
cd /log
ls
cat LOGFILENAME
```

The below table describes the four relevant log files for HA.

| Log file | Description |
|---|---|
| msync.log | HA synchronization service. |
| ctsyncd.log | Conntrack synchronization service. |
| applog.log | HA configuration and status updates. |
| csc.log | Central service, which manages all services. |
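If you want to skim all four files in one go rather than `cat` them one by one, a minimal POSIX shell sketch follows. It assumes the files sit in `/log` as described above and that the advanced shell provides `sh` and `tail`; the helper name `show_ha_logs` is hypothetical, not a Sophos tool.

```shell
#!/bin/sh
# Sketch: print the last lines of each HA-related log file.
# Assumptions: the logs live in /log and the shell has POSIX sh and tail.
# show_ha_logs is a hypothetical helper, not part of SFOS.

show_ha_logs() {
    dir=${1:-/log}     # log directory, /log by default
    lines=${2:-50}     # number of trailing lines to show per file
    for f in msync.log ctsyncd.log applog.log csc.log; do
        if [ -f "$dir/$f" ]; then
            echo "== $f =="
            tail -n "$lines" "$dir/$f"
        else
            # Report missing files instead of failing silently
            echo "== $f == (not found in $dir)"
        fi
    done
}
```

For example, `show_ha_logs /log 100` prints the last 100 lines of each file, which can help line up a switch reboot with the HA state changes around it.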

Regards

"Sophos Partner: Networkkings Pvt Ltd".

If a post solves your question please use the 'Verify Answer' button.

I initially understood the problem differently too...

But:

If a monitored interface goes “down”, the firewall goes into “FAILED” status and stops processing traffic.

Unfortunately, the same thing happens to the slave at the same time if both nodes (master and slave) have a "monitored link down".

...unlike the SG firewall, which keeps the last "working" node active.

Dirk

Systema Gesellschaft für angewandte Datentechnik mbH // Sophos Platinum Partner

Sophos Solution Partner since 2003

If a post solves your question, click the 'Verify Answer' link at this post.

So, I looked into this, as I did not remember this issue.

My cluster handled this behavior the same way UTM did.

I brought both monitoring ports down (at the same time) on both appliances.

No reboot of Node1 and no reboot of Node2.

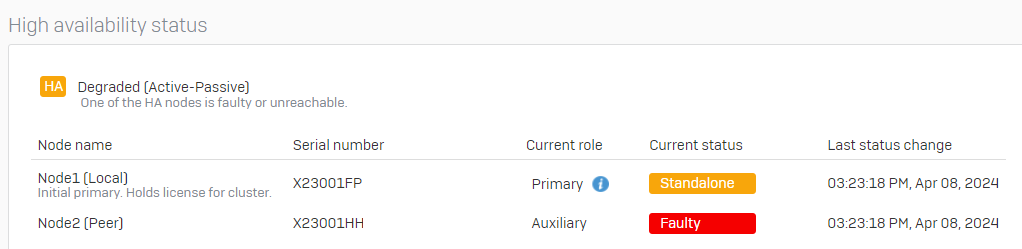

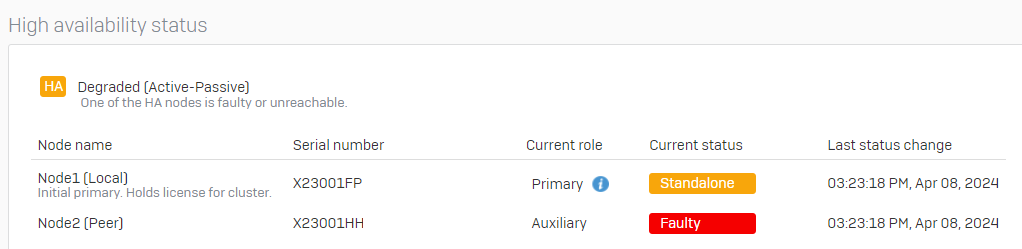

Node2 went into "Faulty". Node1 went into "Standalone". Traffic was still processed by Node1, with no interruption in the network.

As soon as I brought both links back up, Node2 rebooted. Node1 stayed online as Standalone, with no interruption in the network.

Node2 rejoined the cluster after the reboot and everything worked.

So I am not seeing any kind of issue here.

Just to make sure, I did another test: I took down Port1 (the monitoring port) on the AUX first. It went into Faulty (no reboot). Then I waited a few seconds and also brought Port1 down on the primary. The primary still remained Standalone and waited.

__________________________________________________________________________________________________________________


Hi LuCar,

This behaviour would be great and is what I expected, but I brought down a network by rebooting a small (but monitored) DMZ switch. All internal routing stopped.

Compare ticket 07015414

"Hello Dirk,

I clarified during the call that in the event that any of the primary appliance's monitoring ports are down, the primary appliance will enter a fault state and the auxiliary appliance will assume control of the current traffic. If both appliances' monitor ports fail, both appliances will enter a faulty state and during that time, traffic will not be entertained"

"We can see that the Monitoring Port for the Primary was down, and as a result, it entered the FAULT condition.

If we check the AUX device at the same time, Monitoring Port was down.

Due to the Monitoring Port going down, both appliances were in an FAULT condition when the problem occurred."

I asked about 10 people at Sophos. Everyone said this was normal/expected behaviour.

I actually didn't want to believe that.

Dirk


Basically, Sophos Firewall will try to stay online 24/7.

This could be related to the general firmware update question:

https://community.sophos.com/kb/en-us/122816

Many points are covered by this KBA:

https://community.sophos.com/kb/en-us/123174

As well as this:

https://community.sophos.com/kb/en-us/126722

Also verify the switch configuration, as a switch misconfiguration can cause this issue.
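To see what the firewall actually observes while the switch reboots, one option is to poll the link state of the monitored port and timestamp every change, then compare those times with the HA log files listed earlier in the thread. This is a minimal sketch assuming the standard Linux `/sys/class/net/<interface>/operstate` file is readable from the SFOS advanced shell; the interface name `Port1` and the helper `watch_link` are placeholders, not documented SFOS names.

```shell
#!/bin/sh
# Sketch: log link-state changes of one interface with timestamps.
# Assumptions: the kernel exposes /sys/class/net/<iface>/operstate and the
# interface name (e.g. Port1) is a placeholder for your monitored port.

watch_link() {
    state_file=$1          # e.g. /sys/class/net/Port1/operstate
    count=${2:-3600}       # number of polls before stopping
    interval=${3:-1}       # seconds to sleep between polls
    last=""
    i=0
    while [ "$i" -lt "$count" ]; do
        # Read the current state; print "missing" if the file is unreadable
        cur=$(cat "$state_file" 2>/dev/null || echo missing)
        if [ "$cur" != "$last" ]; then
            # Only print when the state differs from the previous poll
            echo "$(date '+%Y-%m-%d %H:%M:%S') $cur"
            last=$cur
        fi
        i=$((i + 1))
        sleep "$interval"
    done
}
```

For example, `watch_link /sys/class/net/Port1/operstate 600 1` watches the port for roughly ten minutes at one-second intervals.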

Regards

"Sophos Partner: Networkkings Pvt Ltd".


There is an old statement about this behavior, from 2018.

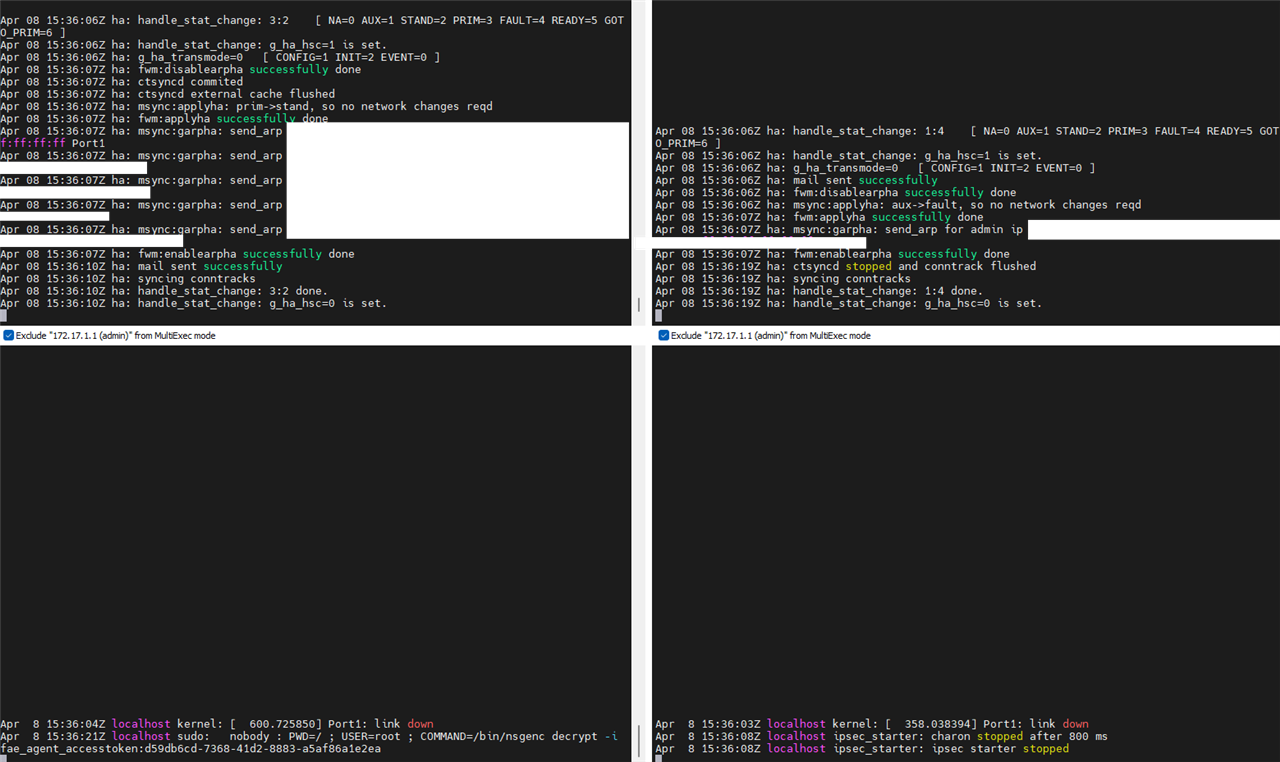

What I did now: I rebooted a switch that is connected to the monitoring Port1 on both appliances.

On the left you see the Primary; on the right, the AUX.

To me, this looks totally fine. I tested it with a switch reboot and also with an "ifconfig down" command within SFOS itself.

I have now done this test 3 times, each time with the same result.
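The "ifconfig down" variant of this test can be scripted roughly as follows. This is a sketch, assuming the monitored port is called `Port1` in the SFOS advanced shell and that `ifconfig` is available there; the helpers `run` and `flap_monitored_port` are hypothetical. Set `DRY_RUN=1` to print the commands without touching the interface, and only run it for real in a maintenance window.

```shell
#!/bin/sh
# Sketch of the "ifconfig down" test: take the monitored port down,
# wait, then bring it back up.
# Assumptions: the port name (Port1) and ifconfig availability on SFOS.
# With DRY_RUN=1, every command (including the sleep) is only printed.

run() {
    if [ "${DRY_RUN:-0}" = 1 ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

flap_monitored_port() {
    port=${1:-Port1}   # monitored interface (assumed name)
    hold=${2:-30}      # seconds to keep the link down
    run ifconfig "$port" down
    run sleep "$hold"
    run ifconfig "$port" up
}
```

To mirror the earlier AUX-then-primary test, start it on the AUX and, a few seconds later, on the primary in a separate session.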

__________________________________________________________________________________________________________________