Important note about SSL VPN compatibility for 20.0 MR1 with EoL SFOS versions and UTM9 OS. Learn more in the release notes.

Hi Erick, kindly see the results below:

XGS4300_AM02_SFOS 19.5.3 MR-3-Build652 HA-Primary# df -kh

Filesystem Size Used Available Use% Mounted on

none 1.5G 12.6M 1.4G 1% /

none 15.6G 384.0K 15.6G 0% /dev

none 15.6G 1.7G 13.9G 11% /tmp

none 15.6G 14.8M 15.6G 0% /dev/shm

/dev/boot 126.2M 43.5M 80.0M 35% /boot

/dev/mapper/mountconf

954.9M 82.2M 868.8M 9% /conf

/dev/content 22.3G 482.7M 21.9G 2% /content

/dev/var 179.3G 179.3G 0 100% /var

-------------------------------------------------------------------------------------------------------------------

XGS4300_AM02_SFOS 19.5.3 MR-3-Build652 HA-Primary# ls -lahr /var/cores

-rw------- 1 root 0 133.9K Mar 3 03:54 f6e29fab-d721-4345-056cac b4-0f420ccc.dmp

-rw------- 1 root 0 133.9K Mar 2 20:31 f680aa6b-61e6-44fe-d76754 af-f0578015.dmp

-rw------- 1 root 0 133.9K Mar 3 08:53 f5a118b1-b524-496c-aaf070 8b-d3ca5a36.dmp

-rw------- 1 root 0 133.9K Mar 3 10:46 f2d017ae-3638-432a-a531ef 83-2e965352.dmp

-rw------- 1 root 0 133.9K Mar 3 10:01 f1f4f007-0129-4eb3-789b1e 95-e7a5b0ab.dmp

-rw------- 1 root 0 133.9K Mar 3 11:19 f177bf94-8382-4876-5aa4ac 9b-fff504a3.dmp

-rw------- 1 root 0 133.9K Mar 3 02:17 ea135b88-03fd-4cac-ebe25d 90-ca004e48.dmp

-rw------- 1 root 0 133.9K Mar 3 02:50 e3d0f75e-2d7d-451e-28e78f ac-252e4adb.dmp

-rw------- 1 root 0 133.9K Mar 3 07:58 e35a94b0-6184-4989-53b0f0 8e-3712d64e.dmp

-rw------- 1 root 0 133.9K Mar 3 08:30 e3243ae2-a8bb-4699-f4efbc a1-7e480f0e.dmp

-rw------- 1 root 0 133.9K Mar 3 05:11 de1a5970-a37a-4201-25d629 8a-97d3b2e5.dmp

-rw------- 1 root 0 133.9K Mar 2 21:44 d9d09f01-02f7-46e3-90e75d 88-2edaa8a3.dmp

-rw------- 1 root 0 133.9K Mar 2 23:20 d74ee20f-db38-44d1-0059d9 ba-6c513037.dmp

-rw------- 1 root 0 51.5M Mar 7 10:45 core.garner

-rw------- 1 root 0 1006.1M Feb 19 15:57 core.EpollWorker_09

-rw------- 1 root 0 1.5G Feb 15 10:04 core.EpollWorker_00

-rw------- 1 root 0 133.9K Mar 3 11:50 cec08087-ffcf-4c8b-286321 bd-9eff359e.dmp

-rw------- 1 root 0 133.9K Mar 3 02:06 cb223edc-a704-462e-420b8e 8c-80900d66.dmp

-rw------- 1 root 0 137.9K Mar 2 22:05 c9075783-9f83-4cca-e16d35 a3-7f844e95.dmp

-rw------- 1 root 0 133.9K Mar 3 01:55 c5252a65-f808-4332-7a0610 bd-89ed9695.dmp

-rw------- 1 root 0 133.9K Mar 2 22:16 c4d3c556-462b-4c17-e5b2d7 8b-52981f2f.dmp

-rw------- 1 root 0 133.9K Mar 3 10:24 c2b6962e-3d1d-4cfb-472822 9e-8ebe2690.dmp

-rw------- 1 root 0 133.9K Mar 2 20:20 c1853426-d314-4e64-f39b94 a1-934702a2.dmp

-rw------- 1 root 0 133.9K Mar 3 02:28 c159abb2-56a8-4779-1e9252 86-08b01c72.dmp

-rw------- 1 root 0 133.9K Mar 3 10:36 b1b05ec8-d18f-474d-13cb1d 84-99bc922d.dmp

-rw------- 1 root 0 133.9K Mar 3 00:19 b02624a0-e9ce-467f-ce85ec b5-e78f8766.dmp

-rw------- 1 root 0 133.9K Mar 3 03:11 afd6d155-562d-4b1d-5ab8ac 94-5d7c59f7.dmp

-rw------- 1 root 0 133.9K Mar 3 06:16 aee22f36-0453-44ec-840d58 ac-d7bdaed8.dmp

-rw------- 1 root 0 133.9K Mar 3 07:35 ac27aab0-cd78-40e7-3da2a0 94-d5187711.dmp

-rw------- 1 root 0 133.9K Mar 3 03:22 ab729263-1954-48b5-859d6c ae-02079e2d.dmp

-rw------- 1 root 0 133.9K Mar 3 12:01 a852598c-eaaa-41f5-73e2b1 a8-b79a6dc8.dmp

-rw------- 1 root 0 133.9K Mar 3 06:05 a797fabe-626f-4732-29d606 8c-80fcbb2b.dmp

-rw------- 1 root 0 133.9K Mar 2 23:52 a66f7952-d197-4ef8-c93196 ac-760db06d.dmp

-rw------- 1 root 0 133.9K Mar 3 07:12 a4ea1740-c39d-4ad5-9ff399 8a-aa62fe62.dmp

-rw------- 1 root 0 133.9K Mar 3 04:50 a3853597-d738-4b5a-df4198 be-16f7c43a.dmp

-rw------- 1 root 0 133.9K Mar 3 05:54 a1a5b8f8-b03e-4d5b-d6f7b6 af-aa068ac2.dmp

-rw------- 1 root 0 133.9K Mar 2 23:10 966ab09b-8afe-4669-bfc993 b0-c219ad82.dmp

-rw------- 1 root 0 133.9K Mar 3 07:00 96594c22-a5eb-4da6-592af9 a9-699bb96b.dmp

-rw------- 1 root 0 133.9K Mar 3 09:50 95de6a9f-7c50-4a51-9c3108 98-acdd2d0d.dmp

-rw------- 1 root 0 133.9K Mar 3 06:27 949718c9-1a7d-426f-77c4da 87-b820da9d.dmp

-rw------- 1 root 0 133.9K Mar 3 11:40 933b8a05-02c6-48b5-2eaa3c 85-5929a59f.dmp

-rw------- 1 root 0 133.9K Mar 3 11:29 93342ae1-455f-464b-165aa3 b8-7400a329.dmp

-rw------- 1 root 0 133.9K Mar 3 09:38 91e32261-9b92-426f-c3ae32 83-49b4f3af.dmp

-rw------- 1 root 0 133.9K Mar 2 21:34 8eefea88-97e7-40cb-cbbc8f b3-9b945c0b.dmp

-rw------- 1 root 0 133.9K Mar 2 20:41 89a8af0c-d0a9-4012-7d6730 8a-611f5c5c.dmp

-rw------- 1 root 0 133.9K Mar 3 05:22 874007c2-ad84-47a9-28279d ac-9ac66561.dmp

-rw------- 1 root 0 133.9K Mar 3 07:24 859d19b2-de27-4ef8-f96383 bf-3413d140.dmp

-rw------- 1 root 0 133.9K Mar 3 06:49 82a68c53-c2e0-4bcb-71fb77 b0-bb372276.dmp

-rw------- 1 root 0 133.9K Mar 3 01:13 7fe7bc3a-4e78-40f8-4aced4 b0-728a1a35.dmp

-rw------- 1 root 0 133.9K Mar 2 21:02 7990781d-be0c-4e9a-1d9d21 97-e9d2bf7f.dmp

-rw------- 1 root 0 133.9K Mar 3 08:41 7784bdfb-dc93-48ed-86e04f b1-1a011fae.dmp

-rw------- 1 root 0 133.9K Mar 3 09:27 775f6a26-947e-491d-cc1421 a3-620d2e89.dmp

-rw------- 1 root 0 133.9K Mar 2 23:42 741de3d6-0781-44f9-00bc5c 9b-158070a8.dmp

-rw------- 1 root 0 133.9K Mar 3 08:08 73546fbb-03bd-4a4f-fdfbf9 a5-75e1a9b1.dmp

-rw------- 1 root 0 133.9K Mar 3 10:12 71b532d9-1bcb-4ac4-1ab719 9d-90a64eda.dmp

-rw------- 1 root 0 137.9K Mar 3 05:00 6d88688c-b2c4-46ac-86d4d6 ac-65be9372.dmp

-rw------- 1 root 0 133.9K Mar 3 09:16 6d2a7205-ec1c-42ce-256c73 ad-911a716f.dmp

-rw------- 1 root 0 133.9K Mar 2 23:31 68b60c09-fa15-4e21-84c772 80-3c1bdddf.dmp

-rw------- 1 root 0 133.9K Mar 3 00:03 62577ebd-c131-4f9e-ba3a0f a5-aadda394.dmp

-rw------- 1 root 0 133.9K Mar 3 06:38 5e9651cf-6a3d-4d0f-643a51 80-378d40bb.dmp

-rw------- 1 root 0 133.9K Mar 2 21:23 58f2e124-9280-4ef7-6d7e08 b5-10f117f9.dmp

-rw------- 1 root 0 133.9K Mar 3 04:39 5878b3f5-1d61-489d-d4cdcf b2-307f777a.dmp

-rw------- 1 root 0 133.9K Mar 2 22:59 50c3d460-d9ae-4154-b6d76d 91-7e9f53d8.dmp

-rw------- 1 root 0 133.9K Mar 3 04:16 4ceed7c2-e7d8-4c2a-6f3239 b1-5a1d2ccf.dmp

-rw------- 1 root 0 133.9K Mar 3 04:05 4bb9d800-9e2f-495c-12f4d6 9d-8c3b0989.dmp

-rw------- 1 root 0 133.9K Mar 2 22:27 4a827508-e58f-4db1-c93b73 b4-e16ad839.dmp

-rw------- 1 root 0 133.9K Mar 3 03:32 49cfe1ee-aab0-4335-2c3d9f b7-52e4dad3.dmp

-rw------- 1 root 0 133.9K Mar 2 22:48 480cfb7b-2727-4540-06d83e b8-88a2bbdd.dmp

-rw------- 1 root 0 133.9K Mar 3 04:28 44b2e14b-aae1-4892-1155fb b6-0bf34cbf.dmp

-rw------- 1 root 0 133.9K Mar 3 05:32 3e27602d-ea12-4242-cbc3ea 88-e02502a9.dmp

-rw------- 1 root 0 133.9K Mar 3 11:08 33a24bec-2e1b-43b4-77f9fa 93-4cf5c328.dmp

-rw------- 1 root 0 133.9K Mar 2 20:51 30f6f4f0-1b3a-4820-42cc8c b9-9c6030d9.dmp

-rw------- 1 root 0 133.9K Mar 2 21:12 30ed183b-e581-4d33-1329ef 9a-d8fa807f.dmp

-rw------- 1 root 0 133.9K Mar 3 05:43 2bbdc318-01fb-44c5-93686e a9-3292249e.dmp

-rw------- 1 root 0 133.9K Mar 3 09:04 28d73bb1-780f-458a-948895 b3-d7e32421.dmp

-rw------- 1 root 0 133.9K Mar 3 01:24 24f0809b-d064-4880-bac622 8b-68ed7982.dmp

-rw------- 1 root 0 133.9K Mar 3 01:34 2132b2a5-5496-4c4a-4dc3bb 8f-81f915e4.dmp

-rw------- 1 root 0 133.9K Mar 3 07:47 212ea869-8c68-40b1-5cde41 92-a7e61dd0.dmp

-rw------- 1 root 0 133.9K Mar 3 10:57 1c4ad959-1736-4512-2e7647 be-a391e949.dmp

-rw------- 1 root 0 133.9K Mar 3 03:01 1b27d4cd-cf4a-4d72-bdac0a b2-e25167b0.dmp

-rw------- 1 root 0 133.9K Mar 3 08:19 17628001-6772-4bc9-1c8e7d 93-8e269d80.dmp

-rw------- 1 root 0 133.9K Mar 3 02:39 1709dfdb-11eb-4848-48c20e 8a-3de81cc8.dmp

-rw------- 1 root 0 133.9K Mar 2 22:37 1509f324-c7db-41ed-9c8675 b8-a2ca9481.dmp

-rw------- 1 root 0 133.9K Mar 3 03:43 10c898aa-c75f-4189-c90ee9 b8-9f3e3121.dmp

-rw------- 1 root 0 133.9K Mar 2 21:55 0a367260-f5f5-469c-b3069f b8-b6d92625.dmp

-rw------- 1 root 0 133.9K Mar 3 01:45 0a2f596f-3011-4433-8546dc b8-541a48a4.dmp

drwxr-xr-x 41 root 0 4.0K Mar 9 00:00 ..

drwxrwxrwt 2 root 0 4.0K Mar 3 12:01 .

------------------------------------------------------------------------------------------------------------------

XGS4300_AM02_SFOS 19.5.3 MR-3-Build652 HA-Primary# tail -n 50 /log/garner.log

ERROR Mar 05 22:31:52Z [4117306176]: do_curl_operation_on_file[CentralReporting] File size is 0 and so it will not be sent to central.

ERROR Mar 05 22:31:52Z [4117306176]: do_curl_operation_on_file[CentralReporting] File size is 0 and so it will not be sent to central.

ERROR Mar 05 22:31:52Z [4117306176]: do_curl_operation_on_file[CentralReporting] File size is 0 and so it will not be sent to central.

ERROR Mar 05 22:31:52Z [4117306176]: do_curl_operation_on_file[CentralReporting] File size is 0 and so it will not be sent to central.

ERROR Mar 05 22:31:52Z [4117306176]: do_curl_operation_on_file[CentralReporting] File size is 0 and so it will not be sent to central.

ERROR Mar 05 22:31:52Z [4117306176]: do_curl_operation_on_file[CentralReporting] File size is 0 and so it will not be sent to central.

ERROR Mar 05 22:31:52Z [4117306176]: do_curl_operation_on_file[CentralReporting] File size is 0 and so it will not be sent to central.

ERROR Mar 05 22:31:52Z [4117306176]: do_curl_operation_on_file[CentralReporting] File size is 0 and so it will not be sent to central.

ERROR Mar 05 22:32:01Z opsyslog connect success

ERROR Mar 05 22:45:31Z [4152295168]: handle_accept: write() failed during handshake: Broken pipe

ERROR Mar 05 22:45:31Z [4152295168]: handle_accept: client sent invalid ident: z▒▒e on fd: 38

ERROR Mar 05 22:45:31Z [4152295168]: handle_accept: client sent invalid ident: z▒▒e on fd: 40

ERROR Mar 05 22:45:31Z [4152295168]: handle_accept: client sent invalid ident: z▒▒e on fd: 42

ERROR Mar 05 22:45:31Z [4152295168]: handle_accept: client sent invalid ident: |▒▒e on fd: 42

ERROR Mar 05 22:45:31Z [4152295168]: handle_accept: client sent invalid ident: z▒▒e on fd: 43

ERROR Mar 05 22:45:31Z [4152295168]: handle_accept: client sent invalid ident: z▒▒e on fd: 45

ERROR Mar 05 22:45:31Z [4152295168]: handle_accept: client sent invalid ident: z▒▒e on fd: 45

ERROR Mar 05 22:45:31Z [4152295168]: handle_accept: client sent invalid ident: z▒▒e on fd: 46

ERROR Mar 05 22:45:31Z [4152295168]: handle_accept: client sent invalid ident: |▒▒e on fd: 46

ERROR Mar 05 22:45:31Z [4152295168]: handle_accept: client sent invalid ident: z▒▒e on fd: 47

ERROR Mar 05 22:45:31Z [4152295168]: handle_accept: client sent invalid ident: ▒▒▒e▒ on fd: 47

ERROR Mar 05 22:45:31Z [4152295168]: handle_accept: client sent invalid ident: z▒▒e▒ on fd: 48

ERROR Mar 05 22:45:31Z [4152295168]: handle_accept: client sent invalid ident: |▒▒e on fd: 48

ERROR Mar 05 22:45:31Z [4152295168]: handle_accept: client sent invalid ident: |▒▒e on fd: 49

ERROR Mar 05 22:45:31Z [4152295168]: handle_accept: client sent invalid ident: {▒▒e on fd: 49

ERROR Mar 05 22:45:31Z [4152295168]: handle_accept: client sent invalid ident: {▒▒e on fd: 50

ERROR Mar 05 22:45:31Z [4152295168]: handle_accept: client sent invalid ident: z▒▒e on fd: 52

ERROR Mar 05 22:45:31Z [4152295168]: handle_accept: client sent invalid ident: {▒▒e on fd: 52

ERROR Mar 05 22:45:31Z [4152295168]: handle_accept: client sent invalid ident: {▒▒e on fd: 53

ERROR Mar 05 22:45:31Z [4152295168]: handle_accept: write() failed during handshake: Broken pipe

ERROR Mar 05 22:45:31Z [4152295168]: handle_accept: write() failed during handshake: Broken pipe

ERROR Mar 05 22:45:31Z [4152295168]: handle_accept: write() failed during handshake: Broken pipe

ERROR Mar 05 22:45:31Z [4152295168]: handle_accept: write() failed during handshake: Broken pipe

ERROR Mar 05 22:45:31Z [4152295168]: handle_accept: write() failed during handshake: Broken pipe

ERROR Mar 05 22:45:31Z [4152295168]: handle_accept: write() failed during handshake: Broken pipe

ERROR Mar 05 22:45:31Z [4152295168]: handle_accept: write() failed during handshake: Broken pipe

ERROR Mar 05 22:45:31Z [4152295168]: handle_accept: write() failed during handshake: Broken pipe

ERROR Mar 05 22:45:31Z [4152295168]: handle_accept: write() failed during handshake: Broken pipe

ERROR Mar 05 22:45:31Z [4152295168]: handle_accept: write() failed during handshake: Broken pipe

ERROR Mar 05 22:45:31Z [4152295168]: handle_accept: write() failed during handshake: Broken pipe

ERROR Mar 05 22:45:31Z [4152295168]: handle_accept: write() failed during handshake: Broken pipe

ERROR Mar 05 22:45:31Z [4152295168]: handle_accept: write() failed during handshake: Broken pipe

ERROR Mar 05 22:45:31Z [4152295168]: handle_accept: write() failed during handshake: Broken pipe

ERROR Mar 05 22:45:31Z [4152295168]: handle_accept: write() failed during handshake: Broken pipe

ERROR Mar 05 22:45:31Z [4152295168]: handle_accept: write() failed during handshake: Broken pipe

ERROR Mar 05 22:45:31Z [4152295168]: handle_accept: write() failed during handshake: Broken pipe

ERROR Mar 05 22:45:31Z [4152295168]: handle_accept: write() failed during handshake: Broken pipe

ERROR Mar 05 22:45:31Z [4152295168]: handle_accept: write() failed during handshake: Broken pipe

ERROR Mar 05 22:45:31Z [4152295168]: handle_accept: write() failed during handshake: Broken pipe

The /var partition on your firewall is full (100% used).

This is most likely caused by reporting data.

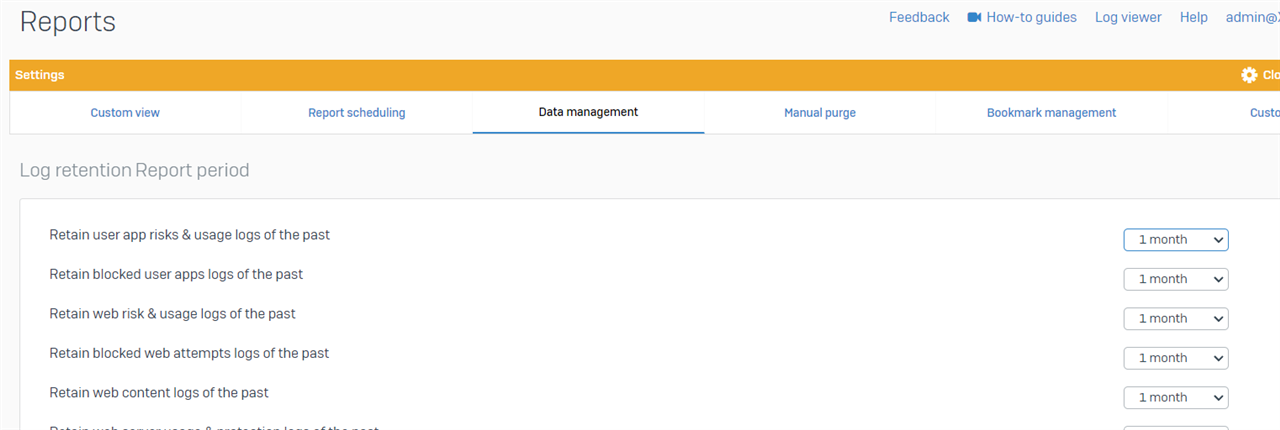

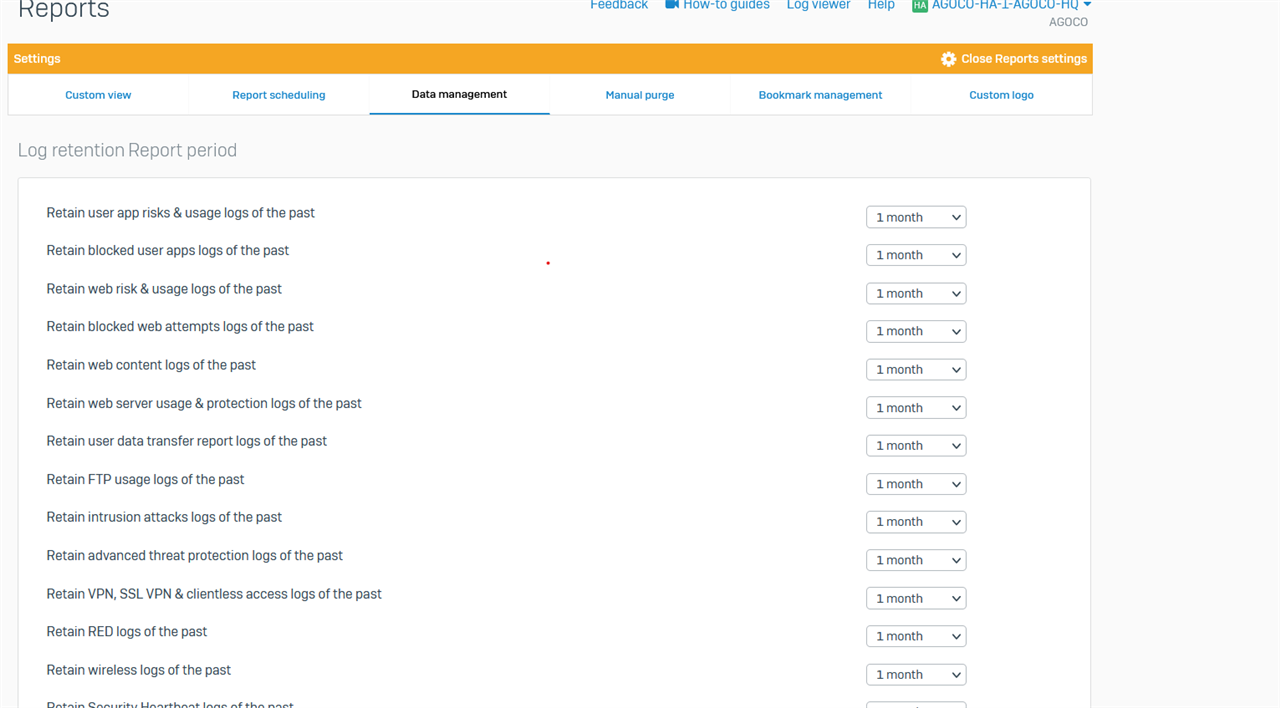

Could you share a screenshot of your Reporting Data Management page?

You will likely have to purge data on the manual purge page as well.

After that, adjust the data logging, as the disk on your firewall is not big enough for the amount of data being kept.

You could also look into CFR (Central Firewall Reporting) to move your reports to Central instead.
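The guess that reporting data is what filled /var can be checked directly before purging anything. The following is a minimal sketch, assuming the advanced shell provides `du`, `sort` and `tail` (not confirmed anywhere in this thread); `top_dirs` is a hypothetical helper name, not a Sophos command.

```shell
#!/bin/sh
# List the N largest entries directly under a directory, smallest first,
# so the biggest consumer ends up on the last line.
# Sizes are in KiB as reported by `du -k`.
top_dirs() {
    dir="${1:-/var}"   # directory to inspect (default: /var)
    n="${2:-10}"       # how many entries to show
    # -s: one total per entry, -k: KiB units, -x: stay on this filesystem
    du -skx "$dir"/* 2>/dev/null | sort -n | tail -n "$n"
}

# Example: top_dirs /var 10
```

If the last lines point at the reporting database rather than at /var/cores, purging report data and shortening retention is the right direction.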

__________________________________________________________________________________________________________________

Hi Erick, kindly see the picture below.

I changed the duration from 6 months to 1 month.

I also restarted the service, but without success:

XGS4300_AM02_SFOS 19.5.3 MR-3-Build652 HA-Primary# service garner:restart -ds sync

XGS4300_AM02_SFOS 19.5.3 MR-3-Build652 HA-Primary# df -kh

Filesystem Size Used Available Use% Mounted on

none 1.5G 12.6M 1.4G 1% /

none 15.6G 384.0K 15.6G 0% /dev

none 15.6G 1.7G 13.9G 11% /tmp

none 15.6G 14.8M 15.6G 0% /dev/shm

/dev/boot 126.2M 43.5M 80.0M 35% /boot

/dev/mapper/mountconf

954.9M 82.4M 868.6M 9% /conf

/dev/content 22.3G 482.7M 21.9G 2% /content

/dev/var 179.3G 179.3G 0 100% /var

Did you manually purge some content from the database in WebAdmin?

__________________________________________________________________________________________________________________

Yes, I purged all data on the manual purge page.

Is the usage of the /var partition in df -h /var slowly decreasing, or is it still at 100%?

If it is not decreasing, you can purge all data from the shell: https://docs.sophos.com/nsg/sophos-firewall/20.0/Help/en-us/webhelp/onlinehelp/CommandLineHelp/DeviceManagement/index.html#show-firmwares
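To see whether /var usage is actually dropping after a purge, the `df` reading can be sampled at intervals instead of being run by hand. This is only a sketch, assuming `df`, `awk`, `date` and `sleep` are available in the advanced shell; `use_pct` and `watch_mount` are hypothetical helper names.

```shell
#!/bin/sh
# Extract the Use% number for one mount point from df-style output on stdin.
use_pct() {
    awk -v m="$1" 'NF >= 2 && $NF == m { sub(/%/, "", $(NF-1)); print $(NF-1) }'
}

# Print a timestamped Use% reading for a mount point, `count` times,
# waiting `interval` seconds between readings.
watch_mount() {
    mount="$1"
    interval="${2:-60}"
    count="${3:-10}"
    i=0
    while [ "$i" -lt "$count" ]; do
        echo "$(date '+%H:%M:%S') $mount $(df -k "$mount" | use_pct "$mount")%"
        i=$((i + 1))
        if [ "$i" -lt "$count" ]; then
            sleep "$interval"
        fi
    done
}

# Example: watch_mount /var 60 10
```

A reading that stays at 100% over several samples suggests the purge has not freed any space yet.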

__________________________________________________________________________________________________________________

XGS4300_AM02_SFOS 19.5.3 MR-3-Build652 HA-Primary# df -h /var

Filesystem Size Used Available Use% Mounted on

/dev/var 179.3G 179.3G 0 100% /var

Hi Erick, the service came back after rebooting:

/dev/var 179.3G 14.5G 164.8G 8% /var

Thanks a lot