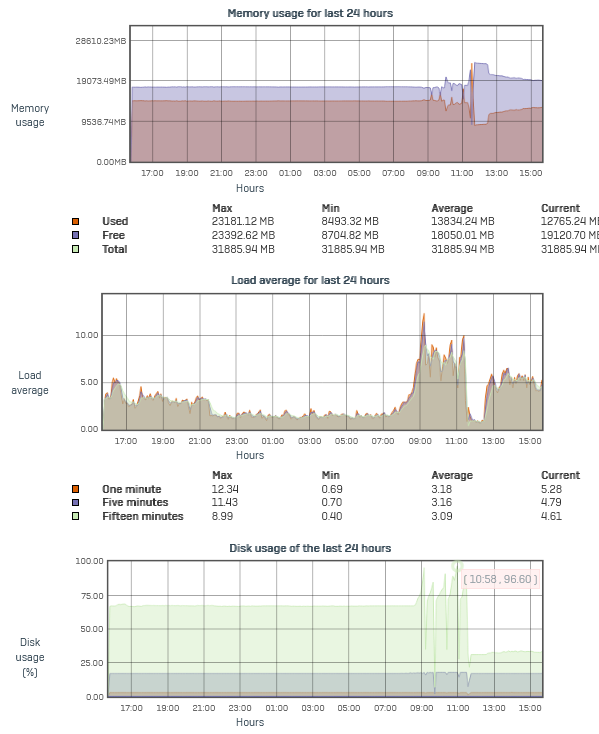

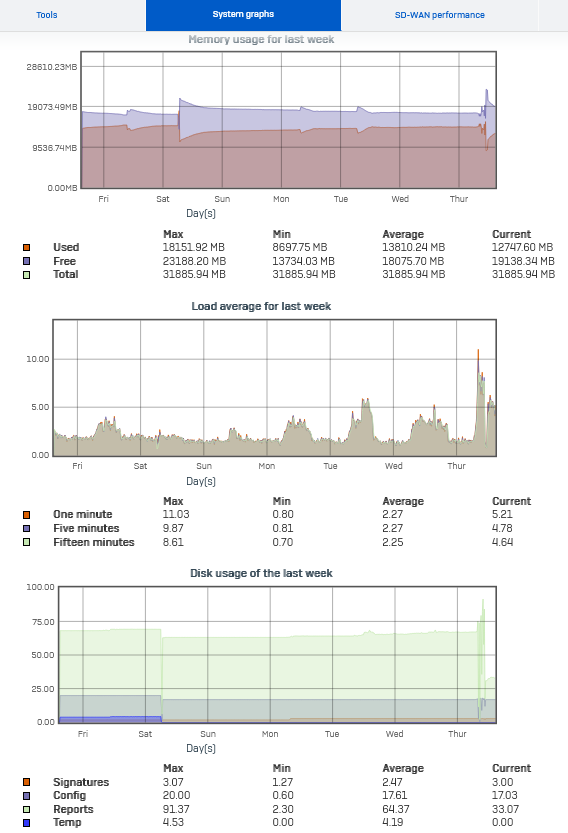

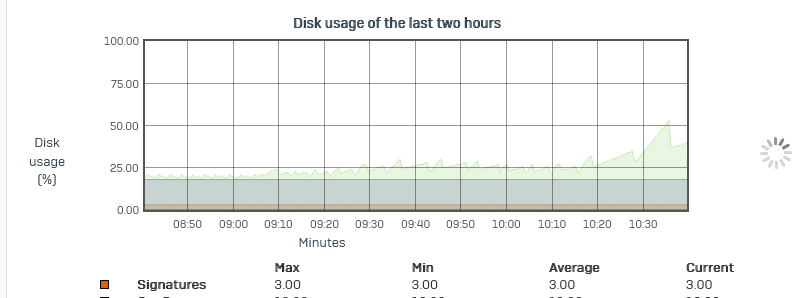

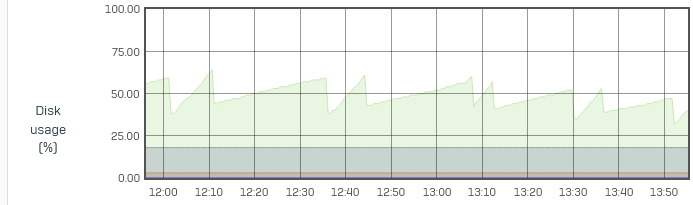

Today we've had a partial outage due to high /var partition usage.

Usage was flapping between 70% and over 90% within a short time:

```
/dev/var 179.3G 138.6G 40.7G 77% /var
/dev/var 179.3G 138.8G 40.5G 77% /var
/dev/var 179.3G 138.9G 40.4G 77% /var
/dev/var 179.3G 139.0G 40.3G 77% /var
/dev/var 179.3G 131.0G 48.3G 73% /var
/dev/var 179.3G 135.7G 43.6G 76% /var
/dev/var 179.3G 141.5G 37.8G 79% /var
/dev/var 179.3G 141.5G 37.8G 79% /var
/dev/var 179.3G 141.6G 37.7G 79% /var
/dev/var 179.3G 141.6G 37.7G 79% /var
/dev/var 179.3G 141.7G 37.6G 79% /var
/dev/var 179.3G 141.7G 37.6G 79% /var
/dev/var 179.3G 141.8G 37.5G 79% /var
/dev/var 179.3G 144.4G 34.8G 81% /var
/dev/var 179.3G 137.8G 41.5G 77% /var
/dev/var 179.3G 137.9G 41.4G 77% /var
/dev/var 179.3G 137.9G 41.4G 77% /var
/dev/var 179.3G 138.0G 41.3G 77% /var
/dev/var 179.3G 139.5G 39.8G 78% /var
/dev/var 179.3G 146.0G 33.3G 81% /var
/dev/var 179.3G 143.0G 36.3G 80% /var
/dev/var 179.3G 144.3G 35.0G 80% /var
/dev/var 179.3G 124.5G 54.8G 69% /var
/dev/var 179.3G 124.6G 54.7G 69% /var
/dev/var 179.3G 124.7G 54.6G 70% /var
/dev/var 179.3G 124.8G 54.5G 70% /var
/dev/var 179.3G 124.9G 54.4G 70% /var
/dev/var 179.3G 125.1G 54.2G 70% /var
/dev/var 179.3G 125.4G 53.9G 70% /var
/dev/var 179.3G 171.1G 8.1G 95% /var
/dev/var 179.3G 148.8G 30.5G 83% /var
/dev/var 179.3G 155.9G 23.4G 87% /var
/dev/var 179.3G 146.0G 33.3G 81% /var
/dev/var 179.3G 144.5G 34.8G 81% /var
/dev/var 179.3G 55.2G 124.1G 31% /var
/dev/var 179.3G 55.3G 124.0G 31% /var
/dev/var 179.3G 56.4G 122.9G 31% /var
/dev/var 179.3G 56.4G 122.9G 31% /var
/dev/var 179.3G 56.8G 122.5G 32% /var
```

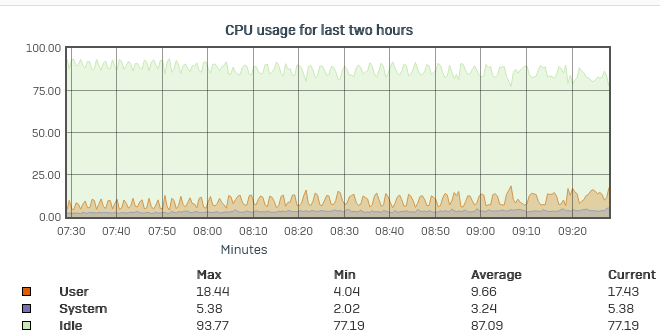

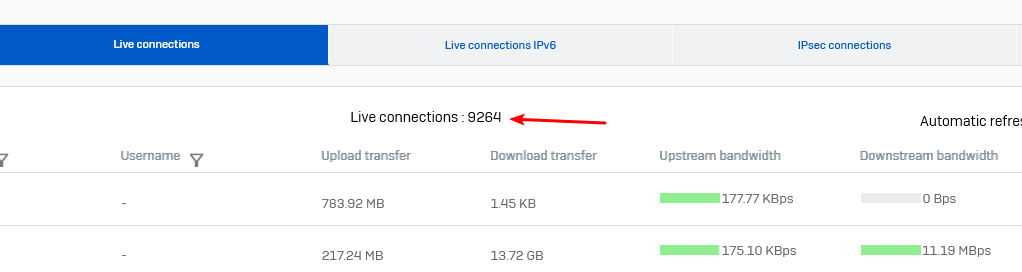

The firewall was rotating the IPS logs at a high rate, and compressing them caused additional CPU load.

As a secondary issue, the heartbeat service failed, so the heartbeat rules stopped working.

We also had a huge number of these IPS detections:

| Count | Signature |
|------:|-----------|
| 20583 | BROWSER-FIREFOX Mozilla multiple location headers malicious redirect attempt |

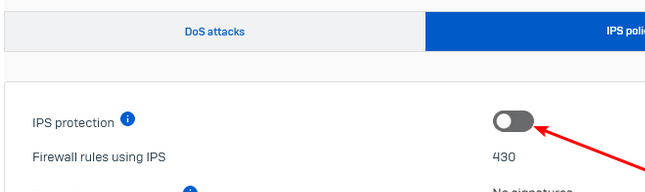

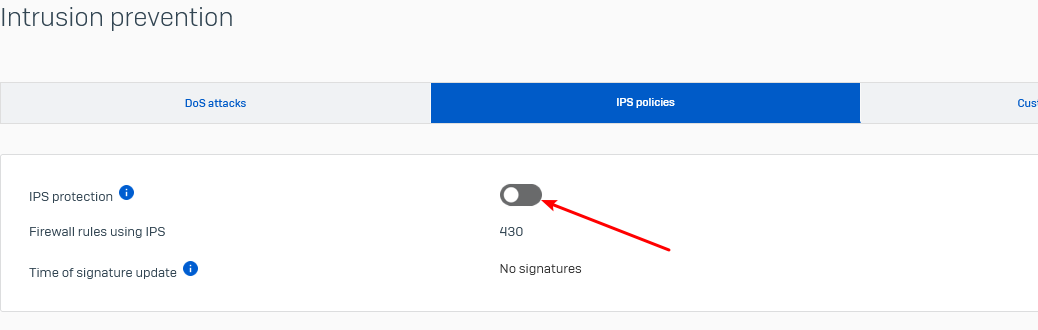

Together with Support on the phone, we flushed the report partition and rebooted the firewall after stopping IPS.

Afterwards, /var was at 31%.

IPS was manually started again after the reboot.

Now it seems to me that IPS is still logging at a high rate: about 1 GB per minute to the text file /log/ips.log.

Support could not find issues related to IPS.

I'm unsure.

I wonder whether such a high log rate is normal for IPS, and whether it is OK for the SSD.
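To put the rate in perspective for the SSD question, here is a rough back-of-the-envelope sketch in Python (not a Sophos tool). It assumes the roughly 1 GB/min seen above is sustained around the clock and treats it as GiB, since `ls -lh` reports binary units. The endurance rating `tbw_tb` is a hypothetical parameter: the real TBW figure for the appliance's SSD would have to come from the vendor's datasheet. Write amplification, the gzip output of rotated logs and all other writes to /var are ignored.

```python
# Rough estimate of how much a sustained log stream writes to the SSD.
# Assumptions (hypothetical, not from Sophos documentation):
#   - the write rate is constant 24/7
#   - tbw_tb is the drive's rated endurance in decimal terabytes (datasheet value)
#   - write amplification and all other writes are ignored

GIB = 2**30  # ls -lh reports binary units (1.0G = 1 GiB)
TB = 10**12  # endurance ratings (TBW) are usually quoted in decimal TB


def daily_write_gib(rate_gib_per_min: float) -> float:
    """GiB written per day at a constant rate given in GiB per minute."""
    return rate_gib_per_min * 60 * 24


def days_until_tbw(rate_gib_per_min: float, tbw_tb: float) -> float:
    """Days until this log stream alone would consume the rated TBW."""
    bytes_per_day = daily_write_gib(rate_gib_per_min) * GIB
    return tbw_tb * TB / bytes_per_day


if __name__ == "__main__":
    rate = 1.0  # about 1 GB per minute, as observed on /log/ips.log
    per_day = daily_write_gib(rate)
    print(f"{per_day:.0f} GiB/day (~{per_day * GIB / TB:.2f} TB/day)")
```

At about 1 GiB/min this works out to 1440 GiB (roughly 1.55 TB) per day. Plugging the actual TBW rating into `days_until_tbw` gives a rough upper bound on how long the drive's rated endurance would last against this log stream alone.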

```
XGS4500_AM02_SFOS 21.0.0 GA-Build169 HA-Primary# ls -lhS ips.*
-rw-r--r-- 1 root root 24.4G Nov 21 11:22 ips.log-20241121_111359
-rw-r--r-- 1 root root 1.0G Nov 21 13:16 ips.log
-rw-r--r-- 1 root root 123.4M Nov 21 13:06 ips.log-20241121_130655.gz
-rw-r--r-- 1 root root 116.9M Nov 21 13:11 ips.log-20241121_131102.gz
-rw-r--r-- 1 root root 109.3M Nov 21 13:14 ips.log-20241121_131454.gz
-rw-r--r-- 1 root root 105.8M Nov 21 13:13 ips.log-20241121_131303.gz
-rw-r--r-- 1 root root 102.8M Nov 21 13:09 ips.log-20241121_130901.gz

XGS4500_AM02_SFOS 21.0.0 GA-Build169 HA-Primary# head /log/ips.log
2024-11-21T12:15:07.934223Z [25339] daq_metadata session id 5158 rev 36361 appid 106 hbappid 0 idp 14 appfltid 0
2024-11-21T12:15:07.935265Z [25340] daq_metadata session id 19208 rev 51831 appid 106 hbappid 0 idp 14 appfltid 0
```

The log keeps filling even though this is still disabled (it was disabled during the case debugging today).

Support case is 02034074

Edit: changed the topic title from "hat high rate" to "at high rate".

[edited by LHerzog at 1:38 PM (GMT -8) on 21 Nov 2024]