I have a customer environment where the server has multiple NICs and IP addresses. The client stores very large video files on the server. Each recording studio has a dedicated 10 Gbps LAN link to the server, and each link is on its own 172.19.x.x/24 subnet in the Class B internal (non-internet) IP address range.

The server's main IP address, with a default gateway to the Internet, is on the 10.0.0.0/24 subnet. Links from the recording studios are direct and have no default gateway, so that traffic does not traverse a router or firewall.

File transfer speeds from each studio to the server before Intercept X were around 750 MB/sec (around 6 Gbps). When Intercept X was installed on the server, that speed fell to under 150 MB/sec.
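As a quick sanity check on the throughput figures, here is a minimal sketch of the unit conversion. It assumes decimal megabytes (1 MB = 10^6 bytes) and ignores protocol overhead; the function name is only illustrative.

```python
def mb_per_sec_to_gbps(mb_per_sec: float) -> float:
    """Convert megabytes per second to gigabits per second (decimal units)."""
    # 1 byte = 8 bits, 1 Gbit = 1000 Mbit
    return mb_per_sec * 8 / 1000


# Figures quoted in this post: before and after installing Intercept X
before_gbps = mb_per_sec_to_gbps(750)
after_gbps = mb_per_sec_to_gbps(150)

print(f"before: {before_gbps:.1f} Gbps")
print(f"after:  {after_gbps:.1f} Gbps")
print(f"slowdown factor: {before_gbps / after_gbps:.1f}x")
```

Treating "under 150 MB/sec" as exactly 150 gives an upper bound on the post-install speed, so the real slowdown is at least the factor printed.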

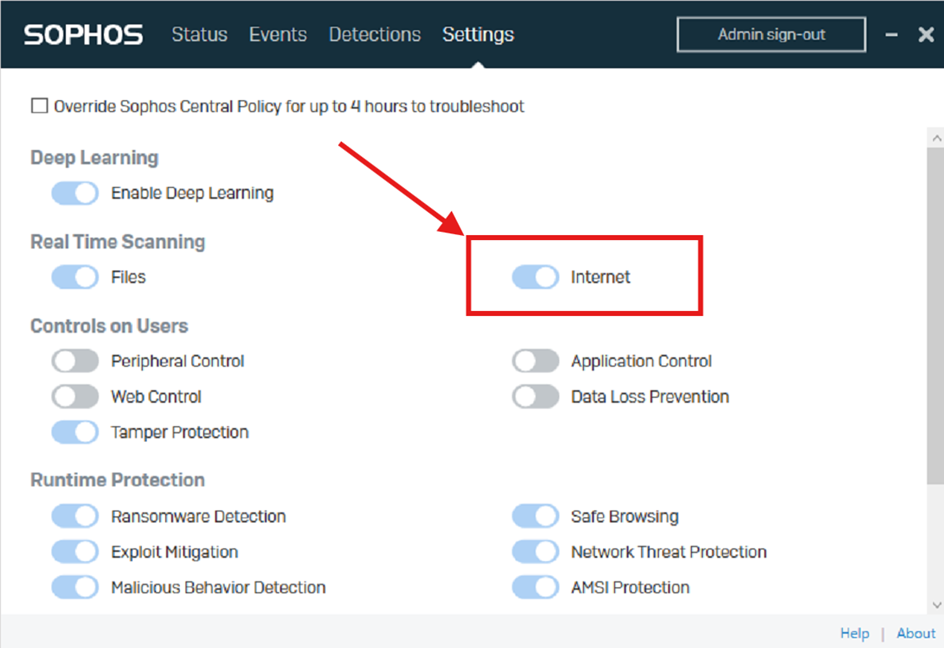

I have found that the Internet option under Real Time Scanning on the *server* is the sole root cause of the severe file transfer speed problems.

That is, after extensive trial and error, changing this one setting, on the server only, is the only thing that changes the speed.

If I disable just Internet RTS on the server and change nothing else at all, file transfers are fast. Turning it back on immediately slows the transfers down again.

We theorise that Intercept X on the server is incorrectly treating the private, non-internet-routable 172.19.x.x ranges in use as "internet" traffic. That would explain why toggling Internet RTS off and on changes the speed.
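To rule out an addressing mistake on our side, the subnets can be checked against the RFC 1918 private blocks. The sketch below uses only Python's standard `ipaddress` module; the helper name `is_rfc1918` is hypothetical, and this says nothing about how Intercept X itself classifies addresses.

```python
import ipaddress

# The three private IPv4 blocks defined by RFC 1918
RFC1918_BLOCKS = [
    ipaddress.ip_network("10.0.0.0/8"),
    ipaddress.ip_network("172.16.0.0/12"),
    ipaddress.ip_network("192.168.0.0/16"),
]


def is_rfc1918(subnet: str) -> bool:
    """Return True if the whole subnet lies inside one RFC 1918 block."""
    net = ipaddress.ip_network(subnet)
    return any(net.subnet_of(block) for block in RFC1918_BLOCKS)


# Subnets mentioned in this post
for subnet in ("172.19.1.0/24", "10.0.0.0/24"):
    print(subnet, "private" if is_rfc1918(subnet) else "NOT private")
```

Note that only 172.16.0.0 through 172.31.255.255 is private, not all of 172.x.x.x, so a product that special-cases this range has to get the /12 boundary right.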

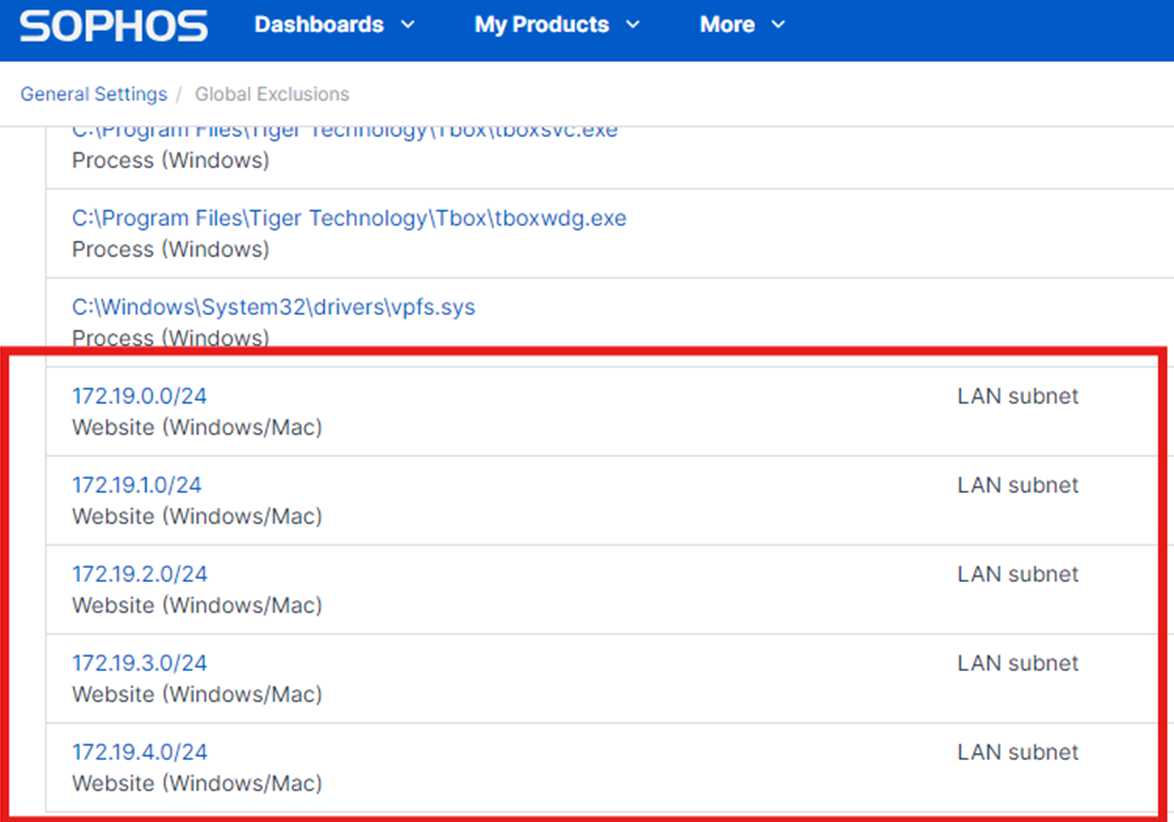

We added the subnets in question (e.g. 172.19.1.0/24) to the global exclusions, yet the speed still does not change. The only thing that works is disabling Internet RTS, and leaving it off long term is not acceptable from a security standpoint.

Has anyone else seen this and found a fix?

[edited by: GlennSen at 7:37 AM (GMT -8) on 4 Nov 2024]