I have implemented transparent HTTPS decrypt and scan. I am using the default generated signing certificate and have imported it into the machines that are being scanned. This is working mostly fine, and I can confirm that HTTPS traffic is being inspected successfully. However, I am seeing in the logs quite a few sites being blocked with error="Failed to verify server certificate". I can confirm this by going to the reported URL in the browser, where the firewall splash page comes back reporting "unable to get local issuer certificate". Here is an example site:

https://www.propertyandlandtitles.vic.gov.au

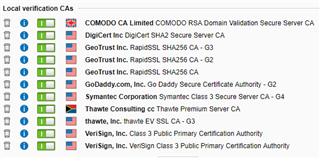

I have been progressively resolving the issue by getting the intermediate issuing certificate and importing it as a "Local Verification CA", including those listed in Sophos article 122257. I have now done this for about 10 certificates from all manner of providers, such as the following, to name a few:

DigiCert Inc DigiCert SHA2 Secure Server CA

Symantec Corporation Symantec Class 3 Secure Server CA - G4

VeriSign, Inc. VeriSign Class 3 Public Primary Certification Authority

After importing these as local verification CAs, the problem seems to be resolved. I am also aware that I can put in exceptions to skip certificate checking, but I'd rather avoid that, and I would certainly rather avoid doing it for all URLs, as I have read some people suggest.

My question is: why do I have to keep installing these intermediate CAs? It seems like a task that will never end. I raised a case with Sophos, but to be honest it wasn't overly helpful; they did a remote session and basically told me to keep doing what I was doing.

I am also aware of this release note for 9.5:

- NUTM-6732 [Web] Certificate issue with transparent Web Proxy - "unable to get local issuer certificate"

I am running 9.503-4. Maybe the problem hasn't really been resolved, or it has reoccurred.

I have visited all of the affected sites using Firefox and confirmed that vanilla Firefox does not show any certificate validation errors. My understanding is that the UTM's built-in root verification CAs mirror those of Firefox.

I have used DigiCert's SSL checker on a few of the affected sites, and it has reported some issues, almost certainly due to server admins incorrectly installing their certificate chains. However, from my perspective, if Firefox can display a page without error and without my having to install anything manually, I would expect the UTM to be able to deal with it too.

It almost feels like the root cause is that the firewall is unable to follow the AIA and OCSP URLs to retrieve any missing intermediate certs?
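For anyone who wants to check an affected site from outside the UTM, here is a minimal Python sketch. It relies on the fact that Python's `ssl` module wraps OpenSSL, which does not download a missing intermediate from the AIA URL on its own, so a server sending an incomplete chain typically fails with the same "unable to get local issuer certificate" message. Whether the UTM's proxy behaves the same way internally is only my assumption. The function names (`describe_failure`, `aia_urls`, `check_chain`) are made up for this example, and the result depends on the trust store of the machine running it.

```python
import socket
import ssl

# OpenSSL verify codes that usually point at a missing intermediate:
# 20 = unable to get local issuer certificate
# 21 = unable to verify the first certificate
INCOMPLETE_CHAIN_CODES = {20, 21}


def describe_failure(code, message):
    """Turn an OpenSSL verify code and message into a short diagnosis."""
    if code in INCOMPLETE_CHAIN_CODES:
        return f"incomplete chain likely ({code}: {message})"
    return f"other verification failure ({code}: {message})"


def aia_urls(cert):
    """Return the AIA 'CA Issuers' URLs from a getpeercert() dictionary."""
    return list(cert.get("caIssuers", ()))


def check_chain(host, port=443, timeout=10.0):
    """Attempt a fully verified TLS handshake and report what happened."""
    ctx = ssl.create_default_context()  # uses the local system trust store
    try:
        with socket.create_connection((host, port), timeout=timeout) as sock:
            with ctx.wrap_socket(sock, server_hostname=host) as tls:
                # Only reached when the chain sent by the server verified.
                return f"chain verified; AIA CA Issuers: {aia_urls(tls.getpeercert())}"
    except ssl.SSLCertVerificationError as exc:
        return describe_failure(exc.verify_code, exc.verify_message)


if __name__ == "__main__":
    print(check_chain("www.propertyandlandtitles.vic.gov.au"))
```

If this reports an incomplete chain for a site that Firefox opens without complaint, that would suggest the server is not sending its intermediate and the browser is obtaining it some other way, which would fit what the DigiCert checker reports.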

I've been reading the forums, and this seems like it might have been a recurring issue over the years. Does this make the solution not viable? Is anyone else having this issue, and are there any possible remedies? I don't really feel like spending the rest of my days hunting down and installing verification CAs. Any feedback would be greatly appreciated.
