Hi

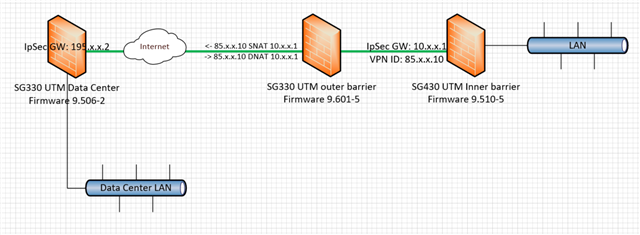

A couple of months ago, an IPsec NAT-T tunnel suddenly started going down a couple of times a day. To get it up again, we disable the tunnel on both sides (we control both gateways, or rather all three firewalls), wait a few minutes and enable it again. When this started, we upgraded and rebooted the outer firewall (see the drawing above). The tunnel was then stable for about a month before the problem returned.

We have verified the policies, timeouts, NTP settings and everything else relevant to IPsec, and they are correct on both gateways.

The problem seems to be on the outer firewall, which does the NAT. If we disable the tunnel on both sides and wait for its connections to time out on the NAT firewall, the tunnel comes up again as soon as we re-enable it on the gateways.
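One possible reading of that observation: as long as either gateway keeps sending NAT-T traffic (ESP or keepalives on UDP 4500), the NAT firewall keeps refreshing the same session entry, so an entry that has gone bad for whatever reason never ages out. Only stopping traffic on both sides lets it expire. The toy model below is a minimal sketch of that idea and is not based on any particular firewall. The class names, the 60-second idle timeout, the 20-second traffic interval and the documentation addresses (192.0.2.x, 198.51.100.x, 203.0.113.x) are all hypothetical.

```python
from dataclasses import dataclass

# Hypothetical flow key: (protocol, inside IP, inside port, outside IP, outside port).
FlowKey = tuple[str, str, int, str, int]
Endpoint = tuple[str, int]


@dataclass
class NatEntry:
    translation: Endpoint  # source address/port the NAT box rewrites this flow to
    last_seen: float       # time (seconds) of the last packet that matched the entry


class NatTable:
    """Toy NAT session table with an idle timeout; not modelled on any real product."""

    def __init__(self, idle_timeout: float) -> None:
        self.idle_timeout = idle_timeout
        self.entries: dict[FlowKey, NatEntry] = {}

    def expire(self, now: float) -> None:
        # Remove entries that have been idle for longer than the timeout.
        stale = [k for k, e in self.entries.items() if now - e.last_seen > self.idle_timeout]
        for k in stale:
            del self.entries[k]

    def packet(self, key: FlowKey, now: float, wanted: Endpoint) -> Endpoint:
        """Forward one packet and return the translation actually used.

        `wanted` is what a freshly created entry would use; an existing,
        still-live entry wins over it, and every packet refreshes that entry.
        """
        self.expire(now)
        entry = self.entries.get(key)
        if entry is None:
            entry = NatEntry(wanted, now)
            self.entries[key] = entry
        entry.last_seen = now
        return entry.translation


if __name__ == "__main__":
    table = NatTable(idle_timeout=60.0)  # assumed UDP idle timeout
    key = ("udp", "192.0.2.10", 4500, "198.51.100.2", 4500)
    old, new = ("203.0.113.1", 4500), ("203.0.113.2", 4500)

    table.packet(key, 0.0, old)  # entry created with `old`
    # Suppose `old` has become wrong; traffic every 20 s keeps the entry alive anyway.
    for t in range(20, 600, 20):
        used = table.packet(key, float(t), new)
    print("while traffic flows:", used)  # still the old translation

    # Both sides disabled for longer than the timeout, then re-enabled.
    print("after a quiet period:", table.packet(key, 720.0, new))
```

The key design point in the model is that every lookup refreshes `last_seen`, which is what keeps a long-lived flow pinned to whatever translation it got first.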

To complicate things, both VPN gateways have many other VPN connections that work as they should.

Does anybody have any clues?

tcpdump output on eth1 of the outer firewall while the tunnel is working:

```text
09:47:36.946832 IP 85.x.x.10.4500 > 195.x.x.2.4500: UDP-encap: ESP(spi=0x440a56b0,seq=0xa95), length 1468
09:47:36.946964 IP 85.x.x.10.4500 > 195.x.x.2.4500: UDP-encap: ESP(spi=0x440a56b0,seq=0xa96), length 1468
09:47:36.946965 IP 195.x.x.2.4500 > 85.x.x.10.4500: UDP-encap: ESP(spi=0x367563fc,seq=0x5e4), length 76
09:47:36.947098 IP 85.x.x.10.4500 > 195.x.x.2.4500: UDP-encap: ESP(spi=0x440a56b0,seq=0xa97), length 1468
09:47:36.947421 IP 85.x.x.10.4500 > 195.x.x.2.4500: UDP-encap: ESP(spi=0x440a56b0,seq=0xa98), length 1468
09:47:36.947499 IP 195.x.x.2.4500 > 85.x.x.10.4500: UDP-encap: ESP(spi=0x367563fc,seq=0x5e5), length 76
09:47:36.948005 IP 195.x.x.2.4500 > 85.x.x.10.4500: UDP-encap: ESP(spi=0x367563fc,seq=0x5e6), length 76
09:47:36.948256 IP 195.x.x.2.4500 > 85.x.x.10.4500: UDP-encap: ESP(spi=0x367563fc,seq=0x5e7), length 76
09:47:36.948467 IP 85.x.x.10.4500 > 195.x.x.2.4500: UDP-encap: ESP(spi=0x440a56b0,seq=0xa99), length 1468
09:47:36.948544 IP 195.x.x.2.4500 > 85.x.x.10.4500: UDP-encap: ESP(spi=0x367563fc,seq=0x5e8), length 76
09:47:36.948620 IP 85.x.x.10.4500 > 195.x.x.2.4500: UDP-encap: ESP(spi=0x440a56b0,seq=0xa9a), length 1468
09:47:36.949581 IP 85.x.x.10.4500 > 195.x.x.2.4500: UDP-encap: ESP(spi=0x440a56b0,seq=0xa9b), length 1468
09:47:36.949885 IP 195.x.x.2.4500 > 85.x.x.10.4500: UDP-encap: ESP(spi=0x367563fc,seq=0x5e9), length 76
09:47:36.950019 IP 85.x.x.10.4500 > 195.x.x.2.4500: UDP-encap: ESP(spi=0x440a56b0,seq=0xa9c), length 284
09:47:36.951062 IP 195.x.x.2.4500 > 85.x.x.10.4500: UDP-encap: ESP(spi=0x367563fc,seq=0x5ea), length 76
09:47:36.951535 IP 195.x.x.2.4500 > 85.x.x.10.4500: UDP-encap: ESP(spi=0x367563fc,seq=0x5eb), length 76
```
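To compare a capture like the one above with one taken while the tunnel is down, a short script can group the NAT-T packets by direction and SPI and check that each flow's ESP sequence numbers keep increasing. This is a sketch that assumes tcpdump's default one-line output exactly as in the capture above. The regular expression and the helper name `summarize` are my own, may need adjusting for other tcpdump versions or flags, and the `length` values are simply summed as tcpdump prints them.

```python
import re
from collections import defaultdict

# Matches tcpdump's one-line summary of a UDP-encapsulated ESP (NAT-T) packet,
# written against the capture above; other tcpdump versions/options may differ.
LINE_RE = re.compile(
    r"^(?P<ts>\S+) IP (?P<src>\S+)\.(?P<sport>\d+) > (?P<dst>\S+)\.(?P<dport>\d+): "
    r"UDP-encap: ESP\(spi=(?P<spi>0x[0-9a-f]+),seq=(?P<seq>0x[0-9a-f]+)\), "
    r"length (?P<length>\d+)$"
)


def summarize(lines):
    """Group NAT-T ESP packets by (src IP, dst IP, SPI); count them and check sequence order."""
    flows = defaultdict(lambda: {"packets": 0, "length_total": 0, "seqs": []})
    for line in lines:
        m = LINE_RE.match(line.strip())
        if m is None:
            continue  # blank lines or anything that is not UDP-encapsulated ESP
        flow = flows[(m["src"], m["dst"], m["spi"])]
        flow["packets"] += 1
        flow["length_total"] += int(m["length"])
        flow["seqs"].append(int(m["seq"], 16))
    for flow in flows.values():
        seqs = flow["seqs"]
        # Within one SA, sequence numbers are expected to rise when captured in order.
        flow["increasing"] = all(b > a for a, b in zip(seqs, seqs[1:]))
    return dict(flows)


SAMPLE = """\
09:47:36.946832 IP 85.x.x.10.4500 > 195.x.x.2.4500: UDP-encap: ESP(spi=0x440a56b0,seq=0xa95), length 1468
09:47:36.946964 IP 85.x.x.10.4500 > 195.x.x.2.4500: UDP-encap: ESP(spi=0x440a56b0,seq=0xa96), length 1468
09:47:36.946965 IP 195.x.x.2.4500 > 85.x.x.10.4500: UDP-encap: ESP(spi=0x367563fc,seq=0x5e4), length 76
09:47:36.947499 IP 195.x.x.2.4500 > 85.x.x.10.4500: UDP-encap: ESP(spi=0x367563fc,seq=0x5e5), length 76
"""

if __name__ == "__main__":
    for (src, dst, spi), f in summarize(SAMPLE.splitlines()).items():
        print(f"{src} -> {dst} spi={spi}: {f['packets']} pkts, "
              f"length total {f['length_total']}, increasing={f['increasing']}")
```

Run against a capture taken during an outage, this would show at a glance whether one direction has stopped, or whether packets still use an SPI that the other side no longer expects.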
