Hi Folks

Apologies for asking what's likely a noob-grade question on the forum, but I would love to hear any tips on how to resolve issues like the one outlined below. (I've fixed this particular example, but only because I had a good idea of which domains I'd likely have to create exceptions for.)

My Configuration:

I've been running UTM, in transparent and NAT mode, for over three years. For the past two years I've also been using HTTPS web filtering, and this year I've added the 'proxy auto configuration' feature, which uses a JavaScript file to direct browsers to the UTM and thus blocks non-standard ports. Everything is working fabulously well.

I have a Ubiquiti WAP on my management LAN and I recently moved my UniFi controller onto one of my VLANs (setting appropriate firewall rules to let STUN and HTTP Proxy ports through) and again, everything is working perfectly.

The Problem:

Today I updated UniFi. The package has a button that prompts UniFi to check for new WAP firmware, but although it showed a nice, friendly green tick about 30 seconds after I pressed it (implying nothing was amiss), it didn't download the current firmware.

I cleared the http.log, pressed the firmware check button twice and gave it a minute. When I then opened the log, all it showed were these two entries:

```
2019:06:10-11:09:44 hadrian httpproxy[5329]: id="0003" severity="info" sys="SecureWeb" sub="http" request="(nil)" function="read_request_headers" file="request.c" line="1626" message="Read error on the http handler 87 (Input/output error)"
2019:06:10-11:10:43 hadrian httpproxy[5329]: id="0003" severity="info" sys="SecureWeb" sub="http" request="(nil)" function="read_request_headers" file="request.c" line="1626" message="Read error on the http handler 89 (Input/output error)"
```
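Because each entry is a run of `key="value"` fields, it can help to pull them apart when scanning a longer log. The minimal Python sketch below parses one of the lines above into a dict. Note that `request="(nil)"` appears alongside `function="read_request_headers"`; this suggests (an inference from the field names, not documented behaviour) that the proxy failed before it had parsed any request, which would explain why no URL or host appears to build an exception from. The helper `nil_request_lines` and any log path you pass it are hypothetical.

```python
import re

# Matches key="value" pairs as they appear in the http.log entries above.
FIELD_RE = re.compile(r'(\w+)="([^"]*)"')


def parse_fields(line: str) -> dict[str, str]:
    """Return the key="value" fields of one log line as a dict."""
    return dict(FIELD_RE.findall(line))


def nil_request_lines(path: str):
    """Hypothetical helper: yield lines whose request field is "(nil)"."""
    with open(path, encoding="utf-8", errors="replace") as fh:
        for line in fh:
            if parse_fields(line).get("request") == "(nil)":
                yield line.rstrip("\n")


SAMPLE = (
    '2019:06:10-11:09:44 hadrian httpproxy[5329]: id="0003" severity="info" '
    'sys="SecureWeb" sub="http" request="(nil)" function="read_request_headers" '
    'file="request.c" line="1626" '
    'message="Read error on the http handler 87 (Input/output error)"'
)

if __name__ == "__main__":
    fields = parse_fields(SAMPLE)
    print(fields["function"], "->", fields["message"])
    # A "(nil)" request means there is no parsed URL in this entry.
    print("request parsed:", fields["request"] != "(nil)")
```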

The packetfilter.log didn't reveal anything interesting either: there was only one entry related to the UniFi controller's MAC address, and it wasn't informative (it also occurred 18 minutes after the two events above).

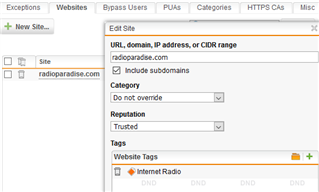

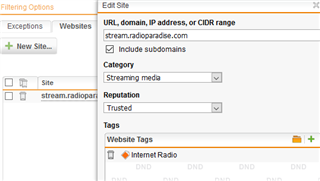

I know that Ubiquiti used to host their firmware at dl.ubnt.com, but a few months ago they changed their main website from ubnt.com to ui.com, so as a wild guess I created these two exceptions...

```
^https?://([A-Za-z0-9.-]*\.)ubnt\.com/*
^https?://([A-Za-z0-9.-]*\.)ui\.com/*
```

...and after pressing the 'check for new firmware' button in UniFi again, this time it downloaded the firmware (and there were no error entries in the http.log).
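Before adding an exception, it can be worth checking which URLs a pattern actually covers. The sketch below tests the two patterns above, copied verbatim, with Python's `re`, assuming the UTM's regex engine treats these simple patterns the same way. The sample URLs (including the firmware path) are hypothetical.

```python
import re

# The two exception patterns from the post, copied verbatim.
PATTERNS = [
    r"^https?://([A-Za-z0-9.-]*\.)ubnt\.com/*",
    r"^https?://([A-Za-z0-9.-]*\.)ui\.com/*",
]


def is_excepted(url: str) -> bool:
    """True if any exception pattern matches the URL."""
    return any(re.search(p, url) for p in PATTERNS)


if __name__ == "__main__":
    for url in [
        "https://dl.ubnt.com/firmware.bin",  # hypothetical firmware path
        "https://www.ui.com/",
        "https://ubnt.com/",                 # bare domain, no subdomain
        "https://dl.ubnt.com.example.net/",  # lookalike host
    ]:
        print(url, is_excepted(url))
```

Two things this shows: the group `([A-Za-z0-9.-]*\.)` requires a dot before `ubnt`, so the bare `ubnt.com` is not matched (making the group optional with `?` would cover it), and because `/*` also matches zero slashes, a lookalike host such as `dl.ubnt.com.example.net` is matched as well (ending the pattern with `/` would prevent that).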

So, in this instance I managed to fix the problem (though only by pseudo-guessing the domains), and I was wondering whether I'm missing another 'technique' that might help when investigating similar issues in the future. I've come across a similar problem before, with similar log entries, when trying to debug an iPad weather app. I eventually gave up and switched to an alternative app, which generated 'useful' log entries and so let me create an exception for it.

My sincere apologies for sending you all to sleep, but if anyone can give me any hints on how to investigate similar anomalies in the future (without resorting to Wireshark) it would massively enhance my own knowledge (and you never know, perhaps it'll even enable me to help other home users on this forum, sometime down the road).

Kind regards,

Briain (GM8PKL)

PS I have SSH access set up and I'm more than happy to use terminal trickery. :)
