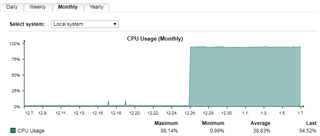

Since the up2date 9.700-5 update, CPU usage on our master SG210 has been running above 94%.

Output from top:

Cpu(s): 41.7%us, 47.9%sy, 0.0%ni, 5.0%id, 0.0%wa, 0.0%hi, 5.3%si, 0.0%st
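As a quick sanity check on the top summary line above, the minimal Python sketch below parses the `Cpu(s):` fields and computes overall busy time. It assumes busy = 100 - idle - iowait, which is one common convention and not necessarily how the SG210 dashboard calculates its figure. With these numbers that gives 95.0%, consistent with the reported >94%. User time (41.7%) and system time (47.9%) make up 89.6% of that.

```python
import re


def parse_cpu_line(line: str) -> dict:
    """Parse a top 'Cpu(s):' summary line into {field: percent}."""
    # Each field looks like '41.7%us'; capture the number and the short tag after '%'.
    fields = re.findall(r"([\d.]+)%(\w+)", line)
    return {name: float(value) for value, name in fields}


line = "Cpu(s): 41.7%us, 47.9%sy, 0.0%ni, 5.0%id, 0.0%wa, 0.0%hi, 5.3%si, 0.0%st"
cpu = parse_cpu_line(line)

# Assumption: treat everything except idle and I/O wait as "busy".
busy = 100.0 - cpu["id"] - cpu["wa"]

print(f"user+system: {cpu['us'] + cpu['sy']:.1f}%")
print(f"busy (100 - idle - iowait): {busy:.1f}%")
```

The high system share (sy) next to user time suggests looking at kernel-heavy work as well as individual processes.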

The HA log says:

2020:01:07-16:57:51 repctl[10837]: [c] standby_clone(936): 'module' => 'postgres-default'
2020:01:07-16:57:51 repctl[10837]: [c] standby_clone(936): };
2020:01:07-16:57:51 repctl[10837]: [c] standby_clone(936): (Attempt #:10)
2020:01:07-16:58:01 repctl[10837]: [w] master_connection(2015): check_dbh: -1
2020:01:07-16:58:03 repctl[10837]: [i] stop_backup_mode(765): stopped backup mode at 00000001000010B0000000DD
2020:01:07-16:58:03 repctl[10837]: [c] standby_clone(950): standby_clone failed: sync aborted (never executed successfully)
2020:01:07-16:58:03 repctl[10837]: [e] prepare_secondary(346): prepare_secondary: clone failed
2020:01:07-16:58:03 repctl[10837]: [c] prepare_secondary(360): failed to get database up, waiting for retry
2020:01:07-16:58:03 repctl[10837]: [c] setup_replication(274): setup_replication was not properly executed
2020:01:07-16:58:03 repctl[10837]: [i] setup_replication(278): checkinterval 300
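The minimal Python sketch below picks the problem entries out of a repctl log excerpt like the one above, including when the entries have been pasted run together on one line. It splits the text at each `YYYY:MM:DD-HH:MM:SS repctl[` timestamp and parses the `[x] function(line): message` layout seen in these lines. The mapping of `[c]` to critical and `[e]` to error is an assumption based on the letters, not on Sophos documentation, and `split_entries` is a hypothetical helper.

```python
import re

# Lookahead: a new entry starts at each timestamp followed by "repctl[".
ENTRY_START = re.compile(r"(?=\d{4}:\d{2}:\d{2}-\d{2}:\d{2}:\d{2} repctl\[)")
ENTRY = re.compile(
    r"(?P<ts>\S+) repctl\[(?P<pid>\d+)\]: \[(?P<level>\w)\] "
    r"(?P<func>\w+)\((?P<src_line>\d+)\): (?P<msg>.*)"
)


def split_entries(blob: str) -> list:
    """Split a (possibly fused) repctl log blob into parsed entry dicts."""
    entries = []
    for chunk in ENTRY_START.split(blob):
        m = ENTRY.match(chunk.strip())
        if m:  # skips the empty chunk produced before the first timestamp
            entries.append(m.groupdict())
    return entries


# A shortened excerpt of the log above, deliberately fused into one string.
blob = (
    "2020:01:07-16:58:01 repctl[10837]: [w] master_connection(2015): check_dbh: -1"
    "2020:01:07-16:58:03 repctl[10837]: [i] stop_backup_mode(765): stopped backup mode at 00000001000010B0000000DD"
    "2020:01:07-16:58:03 repctl[10837]: [c] standby_clone(950): standby_clone failed: sync aborted (never executed successfully)"
    "2020:01:07-16:58:03 repctl[10837]: [e] prepare_secondary(346): prepare_secondary: clone failed"
)

entries = split_entries(blob)
# Assumption: [c] = critical, [e] = error.
problems = [e for e in entries if e["level"] in ("c", "e")]
for e in problems:
    print(e["ts"], e["level"], e["func"], e["msg"])
```

Read this way, the sequence is a failed database connection check (`check_dbh: -1`), then the standby clone aborting, then replication setup being retried every 300 seconds.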

Any remediation suggestions?

Regards,

Koen
