First of all, I'm just a home user, so I feel like I shouldn't be complaining that much in here, or even making this post. ¯\_(ツ)_/¯

---

First Question:

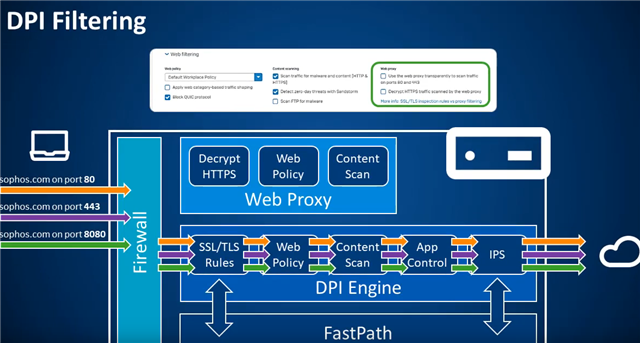

v18 introduced the brand new DPI Engine, which, as described by Michael Dunn, is a:

"Single high-performance streaming DPI engine with proxyless scanning of all traffic for AV, IPS, and web threats as well as providing Application Control and SSL Inspection."

The problem is in this part: "Single high-performance streaming DPI engine with proxyless scanning of all traffic for AV". The AV is the problem.

(I'm not a professional, so I'm sorry if there are mistakes. Please tell me if there are any.)

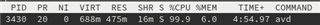

On v17.5, and also on v18 if you use the Legacy Web Proxy, the "avd" service is used for AV.

In my understanding, the new DPI Engine, which uses Snort as its service, would also use Snort for AV. But while using the DPI Engine you can still see the "avd" service being spawned and used by XG for AV scanning.

This is not an issue. I'm not a Sophos dev, so in my opinion it's just weird. Again, it's not an issue.

Playing around on my home setup, the most noticeable difference between the Web Proxy and the DPI Engine (with HTTP(s) scanning enabled) is throughput: the DPI Engine is much faster than the Web Proxy.

Now the "issue": CPS. Both Web Proxy and "Xstream SSL Inspection" can handle the same amount of CPS with the AV, which in my setup is 750~ (wrong, check the edits below). So the main "issue" here is the AV.

CPS = Connections per Second, here it's just HTTPS.

Edit: I shouldn't have done this testing at midnight, I was way too sleepy for it. The numbers for the DPI Engine are correct, but the Web Proxy is slower than I wrote before. Now it makes more sense.

Edit: Also, good job to the Sophos devs, the difference between the Legacy Web Proxy and the new DPI Engine is impressive.

Edit 2: Some additional information that makes the difference between Web Proxy and DPI Engine even more impressive: I used TLS v1.3 with the DPI Engine, while the Web Proxy was on TLS v1.2.

Edit 3: Web Proxy was using this Cipher/Auth combination: TLS_ECDHE_RSA_WITH_AES_256_GCM_SHA384, 256 bit Keys, TLS v1.2;

Edit 3: DPI Engine was using this Cipher/Auth combination: TLS_AES_256_GCM_SHA384, 256 bit Keys, TLS v1.3;

Edit 4: I will try to force TLS v1.2 on the DPI Engine to see if the difference gets even bigger.
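For anyone who wants to check which TLS version and cipher a client actually negotiates through the firewall, here is a minimal Python sketch. It is only an illustration, not how the numbers in this post were measured: the helper names `make_context` and `negotiated_tls` are made up for this example, and it caps the TLS version on the client side, which is an assumption about one way to force TLS v1.2 for a test.

```python
import socket
import ssl


def make_context(max_version=None):
    """Build a client TLS context, optionally capped at a maximum TLS version."""
    ctx = ssl.create_default_context()
    if max_version is not None:
        # Example: ssl.TLSVersion.TLSv1_2 prevents the client from offering TLS v1.3.
        ctx.maximum_version = max_version
    return ctx


def negotiated_tls(host, port=443, max_version=None, timeout=5.0):
    """Connect to host:port and return (tls_version, cipher_name, secret_bits)."""
    ctx = make_context(max_version)
    with socket.create_connection((host, port), timeout=timeout) as sock:
        with ctx.wrap_socket(sock, server_hostname=host) as tls:
            # cipher() returns (name, protocol, secret_bits); names are OpenSSL-style.
            name, _protocol, bits = tls.cipher()
            return tls.version(), name, bits


# Hypothetical usage (needs network access; the host is a placeholder):
# print(negotiated_tls("example.com"))
# print(negotiated_tls("example.com", max_version=ssl.TLSVersion.TLSv1_2))
```

Two caveats: Python reports OpenSSL cipher names, so a TLS v1.2 suite shows up in a form like `ECDHE-RSA-AES256-GCM-SHA384` rather than the IANA name. Also, with SSL inspection enabled the client has to trust the firewall's signing CA, otherwise certificate verification will fail before anything is returned.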

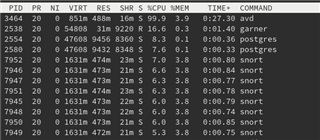

| XG v18 EAP3 Refresh-1 | Web Proxy TLS v1.2 | DPI Engine TLS v1.2 | DPI Engine TLS v1.3 |
| --- | --- | --- | --- |
| CPS with/AV | 275~ | 980~ | 750~ |
| CPS without/AV | 430~ | 4700~ | 4100~ |
| Latency >90%/Connections | 0.070/Sec | 0.014/Sec | 0.101/Sec |

Disable HTTP(s) scanning and there you go: roughly 5x the CPS while using the DPI Engine (about 4.8x on TLS v1.2 and about 5.5x on TLS v1.3). It feels like the current XG AV is holding the DPI Engine back and not letting it reach the performance it's capable of.
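To put a number on how much the AV costs in each mode, here is a quick sketch that just divides the "without AV" CPS by the "with AV" CPS from the table above. The figures are my approximate readings, so treat the ratios as rough; the dictionary layout and the `av_speedup` helper are only for this example.

```python
# Approximate CPS figures copied from the table in this post.
cps = {
    "Web Proxy TLS v1.2": {"with_av": 275, "without_av": 430},
    "DPI Engine TLS v1.2": {"with_av": 980, "without_av": 4700},
    "DPI Engine TLS v1.3": {"with_av": 750, "without_av": 4100},
}


def av_speedup(row):
    """How many times more connections per second are handled with AV scanning off."""
    return row["without_av"] / row["with_av"]


for name, row in cps.items():
    print(f"{name}: {av_speedup(row):.1f}x the CPS with AV scanning off")
```

This works out to about 1.6x for the Web Proxy, 4.8x for the DPI Engine on TLS v1.2 and 5.5x on TLS v1.3, so the AV costs the DPI Engine proportionally far more than it costs the Web Proxy.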

After all this *** writing, my question is: is this expected? Will XG keep using "avd" as the AV for HTTP(s), IMAP, and so on? I'm not saying it's wrong or bad; again, it's just weird. And if it will, then why describe it as a "Single high-performance streaming DPI engine with proxyless scanning of all traffic for AV"? In my understanding of what was said there, shouldn't Snort also be taking care of AV?

---

Second Question:

Why the hell does "avd" use 99.9% of a single core while scanning .txt files?

This becomes an issue when you're running any Linux distro whose package manager downloads .txt files to find out if there are any packages to upgrade. The CPU usage of a single core goes all the way up to 100%.

A single "pacman -Syu", which first downloads 4 .txt files, can take up to 45 seconds, and that's for at most 6MB in total. (I'm on a 400/200Mbit/s WAN, and the package manager mirror is capable of pushing my link to its limits.)
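To show how far off that is from what the link can do, here is the arithmetic as a small sketch. It assumes 400 Mbit/s is the download side of the 400/200 link, that 1 MB is 8 Mbit, and it uses the worst-case numbers from above (6 MB, 45 seconds).

```python
size_mb = 6        # upper bound on the total download size, from the post
link_mbit_s = 400  # assumed downstream speed of the 400/200 Mbit/s WAN
observed_s = 45    # worst-case time observed for the download

size_mbit = size_mb * 8                    # 48 Mbit to transfer
ideal_s = size_mbit / link_mbit_s          # time at full line rate
effective_mbit_s = size_mbit / observed_s  # throughput actually achieved
slowdown = observed_s / ideal_s            # how many times slower than line rate

print(f"ideal: {ideal_s:.2f} s, effective: {effective_mbit_s:.2f} Mbit/s, "
      f"slowdown: {slowdown:.0f}x")
```

So at line rate those files would take about 0.12 seconds, while 45 seconds means an effective throughput of roughly 1 Mbit/s, around 375 times slower than the link allows.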

This doesn't happen with any other file format. Hell, .exe scanning feels instant compared to .txt.

---

Third Question:

Why is "avd" a single-core service?

---

That's It.

Again, it feels like all of this is expected, but I'm just a home user, so I feel like I shouldn't be complaining that much in here.

Also, 750~ CPS is more than sufficient for a home network.

Thanks.