Disclaimer: This information is provided as-is for the benefit of the Community. Please contact Sophos Professional Services if you require assistance with your specific environment.

______________________________________________________________________________________________________________________________________

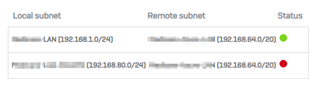

This case study below describes a scenario that has IPsec VPN tunnel site to site VPN between Sophos Firewall (On-Premises) and Azure, with 2 SA’s established, and suddenly one of them get disconnected, and investigation steps that as taken to took to find the root cause of the disconnections events.

Sophos Firewall IP is 285.54.258.149 (Response only)

Azure IP: 51.136.198.59 (initiator)

First step - check if the SA’s status has changed, this can be seen looking at the IPsec logs Charon.log (In debug)* by doing the following command:

NOTE: Enable Debug mode to IPsec Logs : "service strongswan:debug -ds nosync" to disable the debug you most run the same command again

1- Filter the logs with the following syntax “cat Charon.log | grep conn_name”

2019-10-23 20:19:21 23[APP] [COP-UPDOWN][STATUS] (db_status_update) conn_name: azuregw count: 2

2019-10-23 20:22:21 07[APP] [COP-UPDOWN][STATUS] (db_status_update) conn_name: azuregw count: 2

2019-10-23 20:25:21 19[APP] [COP-UPDOWN][STATUS] (db_status_update) conn_name: azuregw count: 2

2019-10-23 20:28:21 25[APP] [COP-UPDOWN][STATUS] (db_status_update) conn_name: azuregw count: 2

2019-10-23 20:31:21 10[APP] [COP-UPDOWN][STATUS] (db_status_update) conn_name: azuregw count: 2

2019-10-23 20:34:21 32[APP] [COP-UPDOWN][STATUS] (db_status_update) conn_name: azuregw count: 2

2019-10-23 20:37:21 27[APP] [COP-UPDOWN][STATUS] (db_status_update) conn_name: azuregw count: 2

2019-10-23 20:40:21 28[APP] [COP-UPDOWN][STATUS] (db_status_update) conn_name: azuregw count: 2

2019-10-23 20:43:21 20[APP] [COP-UPDOWN][STATUS] (db_status_update) conn_name: azuregw count: 1

2019-10-23 20:46:21 30[APP] [COP-UPDOWN][STATUS] (db_status_update) conn_name: azuregw count: 1

2019-10-23 20:49:21 14[APP] [COP-UPDOWN][STATUS] (db_status_update) conn_name: azuregw count: 1

2019-10-23 20:52:21 27[APP] [COP-UPDOWN][STATUS] (db_status_update) conn_name: azuregw count: 1

2019-10-23 20:55:21 30[APP] [COP-UPDOWN][STATUS] (db_status_update) conn_name: azuregw count: 1

2019-10-23 20:58:21 16[APP] [COP-UPDOWN][STATUS] (db_status_update) conn_name: azuregw count: 1

2019-10-23 21:01:21 09[APP] [COP-UPDOWN][STATUS] (db_status_update) conn_name: azuregw count: 1

2019-10-23 21:04:21 19[APP] [COP-UPDOWN][STATUS] (db_status_update) conn_name: azuregw count: 1

Note that the count was showing 2 (remember it has 2 SA’s), and then after 2019-10-23 20:43:21 start show count 1, which proves that the second SA got disconnected, otherwise will be always count 2

Searching the reason why the disconnection happen digging into the charon.log (with debug enabled):

Narrowed down the logs a bit before that timestamp and I could find by filtering the reference “TS” inside of the same log file (Charon.log in debug) that means Traffic Selector, I could find the moment that the SA is disconnect. Note that the Azure side, sends a response that is actually a solicitation to REKEYING the current installed TS:

2019-10-23 20:40:07 15[CHD] <aztest1-1|143> SPI 0xc08f3a27, src 51.136.198.59 dst 285.54.258.149

2019-10-23 20:40:07 15[CHD] <aztest1-1|143> registering outbound ESP SA

2019-10-23 20:40:07 15[CHD] <aztest1-1|143> SPI 0xe37df272, src 285.54.258.149 dst 51.136.198.59

2019-10-23 20:40:07 15[CHD] <aztest1-1|143> CHILD_SA azuregw-1{132} state change: INSTALLING => INSTALLED

2019-10-23 20:40:07 15[IKE] <aztest1-1|143> inbound CHILD_SA azuregw-1{132} established with SPIs c08f3a27_i e37df272_o and TS 192.168.1.0/24

2019-10-23 20:40:07 15[CHD] <aztest1-1|143> CHILD_SA azuregw-1{131} state change: INSTALLED => REKEYING

2019-10-23 20:40:07 15[CHD] <aztest1-1|143> CHILD_SA azuregw-1{131} state change: REKEYING => REKEYED

2019-10-23 20:40:07 15[ENC] <aztest1-1|143> generating CREATE_CHILD_SA response 6269 [ SA No TSi TSr ]

2019-10-23 20:40:07 15[NET] <aztest1-1|143> sending packet: from 285.54.258.149[500] to 51.136.198.59[500] (208 bytes)

2019-10-23 20:40:07 04[NET] sending packet: from 285.54.258.149[500] to 51.136.198.59[500]

2019-10-23 20:40:07 03[NET] received packet: from 51.136.198.59[500] to 285.54.258.149[500] on Port2

2019-10-23 20:40:07 03[NET] waiting for data on sockets

2019-10-23 20:40:07 11[NET] <aztest1-1|143> received packet: from 51.136.198.59[500] to 285.54.258.149[500] (80 bytes)

2019-10-23 20:40:07 11[ENC] <aztest1-1|143> parsed INFORMATIONAL request 6270 [ D ]

2019-10-23 20:40:07 11[IKE] <aztest1-1|143> received DELETE for ESP CHILD_SA with SPI 6d8c6dba

2019-10-23 20:40:07 11[IKE] <aztest1-1|143> closing CHILD_SA azuregw-1{131} with SPIs c300308c_i (532547358 bytes) 6d8c6dba_o (35133355 bytes) and TS 192.168.1.0/24 === 192.168.64.0/20

2019-10-23 20:40:07 11[IKE] <aztest1-1|143> sending DELETE for ESP CHILD_SA with SPI c300308c

2019-10-23 20:40:07 11[CHD] <aztest1-1|143> CHILD_SA azuregw-1{131} state change: REKEYED => DELETING

2019-10-23 20:40:07 11[IKE] <aztest1-1|143> CHILD_SA closed

2019-10-23 20:40:07 11[CHD] <aztest1-1|143> CHILD_SA azuregw-1{131} state change: DELETING => DELETED

Conclusion:

The initiator side (Azure), sends a rekey request, however the SA does not re-establish after the rekey, then Sophos Firewall honor by deleting the TS as requested. In this particular scenario, the issue lays on the Azure side, which will require further investigation on the Azure logs.

______________________________________________________________________________________________________________________________________

Edited TAGs

[edited by: Raphael Alganes at 7:57 AM (GMT -7) on 17 Sep 2024]