I have a home network with three VLANs, wired into an Atom-based appliance running Sophos XG Home. Traffic on the network is a mix of IoT devices, Windows 10 and Server 2022 machines and the like, plus Netflix, Amazon Prime, etc. for family internet use.

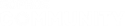

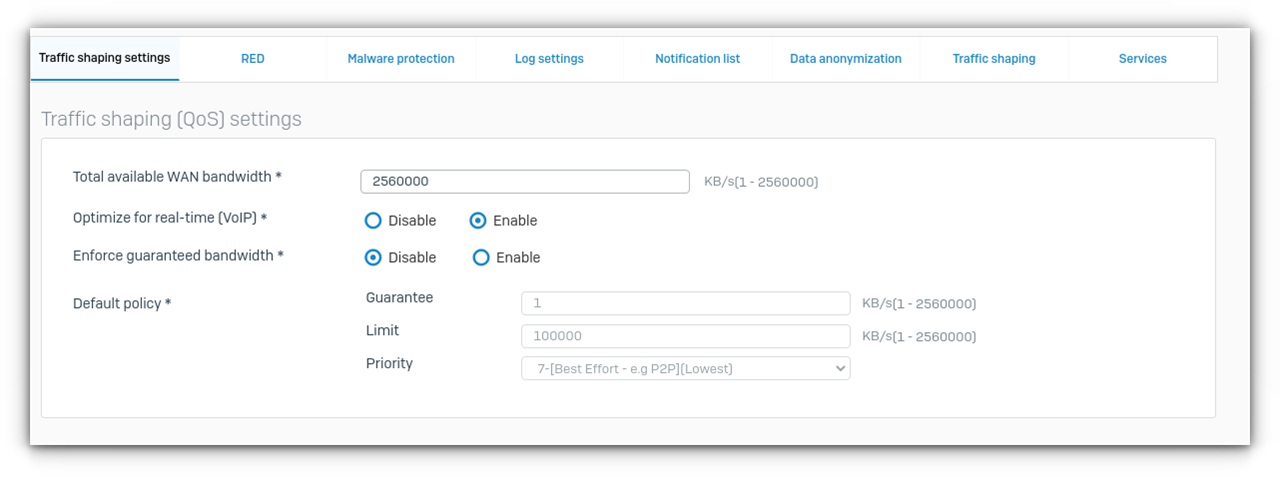

The connection is a Virgin 100/10 Mbps cable line. Are there general QoS recommendations for applying traffic shaping to firewall rules? Bufferbloat is a problem on this connection, but I haven't enabled any traffic-shaping rules yet.

Speed isn't the issue; latency is.
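One way to put a number on "latency, not speed" is to compare round-trip times measured while the line is idle with those measured during a saturating download or upload. The difference is the delay added by queueing (bufferbloat). The Python sketch below does that comparison and also applies a commonly cited rule of thumb: shape to roughly 85 to 95% of the line rate so that the queue forms in the firewall instead of in the cable modem. The 0.9 default, the function names and the sample RTTs are all hypothetical illustrations, not measurements from this connection and not Sophos-specific settings.

```python
from statistics import median


def bufferbloat_ms(idle_rtts_ms, loaded_rtts_ms):
    """Extra latency (ms) added under load: median loaded RTT minus median idle RTT."""
    if not idle_rtts_ms or not loaded_rtts_ms:
        raise ValueError("need at least one idle and one loaded RTT sample")
    return median(loaded_rtts_ms) - median(idle_rtts_ms)


def shaping_limits_kbps(down_mbps, up_mbps, fraction=0.9):
    """Hypothetical rule of thumb: shape slightly below the line rate.

    Returns (download_kbps, upload_kbps). The 0.9 default is an assumption;
    values between about 0.85 and 0.95 are commonly suggested starting points.
    """
    if not 0 < fraction <= 1:
        raise ValueError("fraction must be in (0, 1]")
    return round(down_mbps * 1000 * fraction), round(up_mbps * 1000 * fraction)


if __name__ == "__main__":
    # Hypothetical ping samples (ms): idle line vs. line saturated by a download.
    idle = [12.0, 13.0, 11.5, 12.5]
    loaded = [85.0, 120.0, 95.0, 110.0]
    print(f"Added latency under load: {bufferbloat_ms(idle, loaded):.1f} ms")

    # Nominal 100/10 Mbps line, shaped to the assumed 90% of line rate.
    down, up = shaping_limits_kbps(100, 10)
    print(f"Starting shaping limits: {down} kbps down / {up} kbps up")
```

The limits are only a starting point: convert them to whatever unit the firewall's shaping policy expects, then re-measure the loaded RTT and lower the fraction if the added latency is still high.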
