Hello all,

Due to increased spam traffic containing malicious links, we need to dynamically block certain URLs. With the Policy Helpdesk, I can easily track which filter action and policy were applied for a particular user. I am convinced I blacklisted the webpage in the right place, and yet the user can still access it. The screenshots below show the relevant policy, the filter action, and the Policy Helpdesk test. Of course, I had to cover any text that could lead to de-anonymization of the firewall where the screenshots were taken (hence the white rectangles in some parts of the screenshots). The images attached to my post have poor quality (you cannot read the settings), so I used an external webpage for image hosting.

What am I doing wrong?

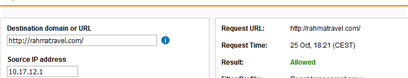

Filter Action:

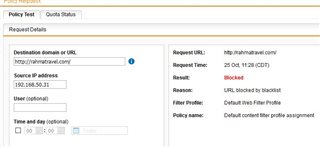

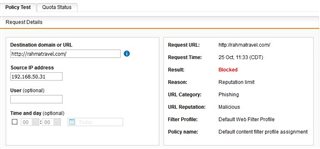

Policy test:

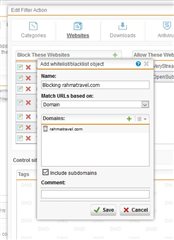

Profile:

Thank you in advance, take care.
