Hello,

I am using UTM 9.503-4 in a home environment. I would like some internal hosts to bypass Web Filtering, so I have added them to "Skip Transparent Mode Source Hosts/Nets" and checked "Allow HTTP/S traffic for listed hosts/nets". However, the Policy Helpdesk shows that the bypassed clients are still being filtered, and many sites using SSL work erratically or not at all. Turning off Web Filtering completely usually resolves the issue and lets traffic pass using only the MASQ and Firewall rules.

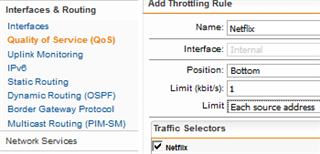

My main reason for using Web Filtering is to set Quotas on YouTube and Netflix, but neither works: the quota never cuts off a connection once it is established (probably because I am not proxying SSL, as this breaks too many sites). That limits me to using time ranges, in which case there doesn't seem to be any advantage to using Web Filtering; I can just use regular L3 rules and time ranges. Is it futile to use the Web Filter in transparent mode without a trusted SSL cert on the clients, since most traffic is SSL these days?

I was hoping for something like the Palo Alto level of application awareness and control, but I guess that's not going to happen for free.
